Introduction

In today’s data-driven world, organizations are constantly seeking ways to make their data more accessible to non-technical users. Traditional SQL queries require specialized knowledge and can be intimidating for business users who need quick insights from their data. Amazon Bedrock Knowledge Base with Redshift integration offers a revolutionary solution that bridges this gap by enabling natural language queries against structured data warehouses.

This article explores how to leverage Amazon Bedrock’s Knowledge Base feature with Amazon Redshift to create intelligent, conversational interfaces that can understand human-readable questions and automatically generate and execute SQL queries against your data warehouse.

What is Amazon Bedrock Knowledge Base with Redshift?

Amazon Bedrock Knowledge Base is a managed service that allows you to create intelligent systems capable of understanding natural language queries and converting them into structured database operations. When integrated with Amazon Redshift, it provides a powerful combination of:

- Natural Language Processing: Understanding human-readable questions

- SQL Generation: Automatically creating optimized SQL queries

- Query Execution: Running queries directly against your Redshift cluster

- Contextual Responses: Providing meaningful answers with proper formatting

Key Benefits

- No SQL Required — Business users query data using plain English

- Instant Self-Service Analytics — Get immediate insights without waiting for technical teams

- Auto-Generated Optimized Queries — Converts natural language to error-free SQL

- Serverless & Scalable — No infrastructure management, handles concurrent users

- Conversational Context — Maintains conversation history and understands follow-up questions

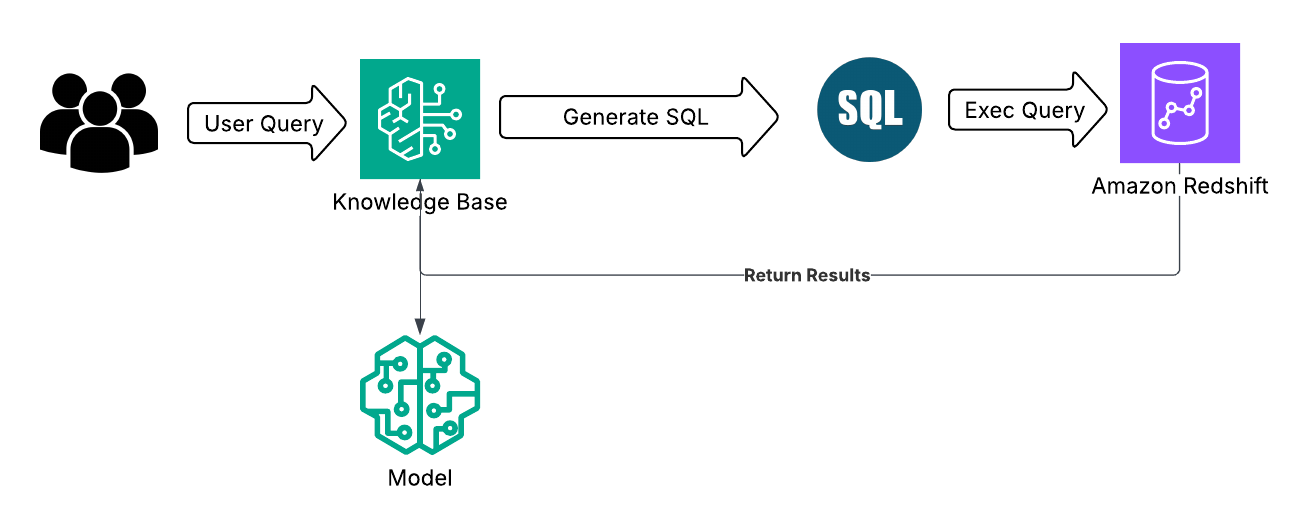

Architecture Overview

The Knowledge Base with Redshift integration follows this architecture:

Implementation Guide

Prerequisites

Before setting up your Knowledge Base, ensure you have:

- An active AWS account with appropriate permissions

- A Redshift cluster with your data loaded

- IAM roles configured for Bedrock access

- Descriptions of the Tables and Column in Redshift

Step 1: Prepare Your Data Schema (Optional but Recommended)

While not strictly required, providing detailed descriptions of your tables and columns significantly improves the Knowledge Base’s understanding of your data structure and query accuracy.

This configuration also allows you to control which tables and columns to include or exclude.

Create a JSON configuration that describes your schema:

# Define table configurations directly for our sample schema

table_configs = [

{

"name": "dev.public.products",

"description": "Product catalog containing item details, pricing, and inventory information",

"inclusion": "INCLUDE",

"columns": [

{

"name": "product_id",

"description": "Unique product identifier",

"inclusion": "INCLUDE"

},

{

"name": "product_name",

"description": "Product display name",

"inclusion": "INCLUDE"

},

{

"name": "category",

"description": "Product category classification",

"inclusion": "INCLUDE"

},

{

"name": "price",

"description": "Current selling price in USD",

"inclusion": "INCLUDE"

},

{

"name": "stock_quantity",

"description": "Available inventory count",

"inclusion": "INCLUDE"

}

]

},

{

"name": "dev.public.orders",

"description": "Customer order transactions with status and fulfillment details",

"inclusion": "INCLUDE",

"columns": [

{

"name": "order_id",

"description": "Unique order identifier",

"inclusion": "INCLUDE"

},

{

"name": "customer_id",

"description": "Customer who placed the order",

"inclusion": "INCLUDE"

},

{

"name": "order_date",

"description": "Date and time when order was placed",

"inclusion": "INCLUDE"

},

{

"name": "total_amount",

"description": "Total order value including taxes and shipping",

"inclusion": "INCLUDE"

},

{

"name": "order_status",

"description": "Current order status: pending, shipped, delivered, cancelled",

"inclusion": "INCLUDE"

}

]

}

]

Step 2: Create the Knowledge Base

Use the AWS SDK or console to create your Knowledge Base with Redshift configuration:

import boto3

def create_knowledge_base():

bedrock_client = boto3.client('bedrock-agent')

# Knowledge Base configuration using correct AWS structure

kb_config = {

"type": "SQL",

"sqlKnowledgeBaseConfiguration": {

"type": "REDSHIFT",

"redshiftConfiguration": {

"queryEngineConfiguration": {

"type": "PROVISIONED",

"provisionedConfiguration": {

"authConfiguration": {

"type": "IAM"

},

"clusterIdentifier": "your-redshift-cluster"

}

},

"queryGenerationConfiguration": {

"executionTimeoutSeconds": 200,

"generationContext": {

"tables": table_configs

}

},

"storageConfigurations": [{

"type": "REDSHIFT",

"redshiftConfiguration": {

"databaseName": "dev"

}

}]

}

}

}

# Create the Knowledge Base

response = bedrock_client.create_knowledge_base(

name="ecommerce-redshift-kb",

description="Knowledge Base for e-commerce Redshift data queries",

roleArn="arn:aws:iam::YOUR_ACCOUNT:role/BedrockKnowledgeBaseRole",

knowledgeBaseConfiguration=kb_config

)

return response['knowledgeBase']['knowledgeBaseId']

# Create the Knowledge Base

kb_id = create_knowledge_base()

print(f"Knowledge Base created with ID: {kb_id}")

Step 3: Query the Knowledge Base

Once your Knowledge Base is created and active, you can query it using natural language:

import boto3

def query_knowledge_base(question, kb_id, session_id=None):

bedrock_client = boto3.client('bedrock-agent-runtime')

# Foundation model ARN (adjust based on your region and model)

foundation_model_arn = "arn:aws:bedrock:us-east-1:001262071246:inference-profile/us.anthropic.claude-sonnet-4-20250514-v1:0"

# Build the request parameters

request_params = {

"input": {"text": question},

"retrieveAndGenerateConfiguration": {

"type": "KNOWLEDGE_BASE",

"knowledgeBaseConfiguration": {

"knowledgeBaseId": kb_id,

"modelArn": foundation_model_arn,

"retrievalConfiguration": {

"vectorSearchConfiguration": {

"numberOfResults": 5

}

}

}

}

}

# Only add sessionId if it's not None

if session_id is not None:

request_params["sessionId"] = session_id

response = bedrock_client.retrieve_and_generate(**request_params)

# Extract session ID from response (new or existing)

response_session_id = response.get('sessionId')

return {

'response': response,

'session_id': response_session_id,

'output': response['output']['text']

}

# Example queries

questions = [

"What are the top 5 customers by order volume?",

"Show me all orders that are currently pending",

"Which products have the highest stock quantity?",

"What's the average order value for products in the electronics category?"

]

# Initialize session ID for conversation continuity

session_id = None

for question in questions:

result = query_knowledge_base(question, kb_id, session_id)

session_id = result['session_id'] # Use session ID for next iteration

print(f"Question: {question}")

print(f"Answer: {result['output']}")

print(f"Generated SQL: {result['response']['citations'][0]['retrievedReferences'][0]['location']['sqlLocation']['query']}")

print("-" * 50)

Here, I added the questions in a list, but this could be extended for use with Amazon Lex or a custom chatbot

Step 4: Session Management with DynamoDB

For conversational interfaces, you’ll want to maintain context across multiple questions. Here’s how to implement session tracking:

Session Storage Strategy:

- Create a DynamoDB table with

session_idas the partition key - Store session metadata, including creation time, last accessed time, and TTL for automatic cleanup

- Optionally store conversation history (questions and answers) for audit trails or enhanced context

This approach ensures that users can have natural, contextual conversations with your Knowledge Base while maintaining proper session isolation and automatically cleaning up old data.

Enhanced Context with Curated Queries

To further improve the query engine’s ability to generate accurate SQL queries, you can optionally provide curated query examples in your generationContext. This helps the system understand common query patterns and business scenarios specific to your data.

"queryGenerationConfiguration": {

"executionTimeoutSeconds": 200,

"generationContext": {

"tables": table_configs,

"curatedQueries": [

{

"naturalLanguage": "What are the top 5 customers by order volume?",

"sql": "SELECT customer_id, COUNT(*) as order_count FROM orders GROUP BY customer_id ORDER BY order_count DESC LIMIT 5"

},

{

"naturalLanguage": "Show me all pending orders",

"sql": "SELECT * FROM orders WHERE order_status = 'pending'"

}

]

}

}

Benefits of Curated Queries:

- Helps the query engine understand your specific business terminology

- Provides examples of common query patterns (aggregations, filtering, joins)

- Improves accuracy for domain-specific queries

- Reduces the need for query refinement iterations

Conclusion

Amazon Bedrock Knowledge Base with Redshift integration represents a significant advancement in making data accessible to non-technical users. By combining the power of natural language processing with the robustness of Amazon Redshift, organizations can democratize data access, reduce the time to insight, and enhance the user experience with conversational interfaces.

The implementation is straightforward, but the impact on organizational productivity and data utilization can be transformative.

References

- AWS Bedrock Knowledge Base API Documentation — Official AWS documentation for creating Knowledge Bases with Bedrock Agent

Author

Noor Sabahi | Senior AI & Cloud Engineer | AWS Ambassador

#Redshift #KnowledgeBase #GenerativeAI #AmazonBedrock #LLM #QueryGeneration