TL;DR — Cursor can 2× your delivery speed if you install hard guardrails. This guide shows how rule files, checkpoint‑and‑restore, test‑driven development, and ruthless pruning let you bank that speed without inflating technical debt.

Why Bother Reading Another AI‑Coding Post?

Most “AI pair‑programmer” articles stop at shiny demos. They rarely answer the question architects lose sleep over: How do we prevent the AI’s optimism from becoming tomorrow’s on‑call fire? Cursor is the first tool that lets engineers bake constraints directly into the generation loop, turning LLMs from creative interns into disciplined apprentices.

After shipping dozens of production deliverables — from RLHF evaluation harnesses to five‑figure SaaS integrations — our team distilled a repeatable recipe that keeps defect counts low and leadership happy:

- Rule Files → codify non‑negotiables up front.

- Checkpoint / Restore → experiment safely.

- Embedded TDD → convert speed into confidence.

- Aggressive Pruning → delete AI drift before it metastasizes.

- Ownership Culture → humans stay accountable for every byte that ships.

If you already run a tight engineering ship, these practices will feel familiar — Cursor simply removes friction so you actually follow them.

Lock in Standards with Rule Files

Rule files are Markdown files with YAML front matter that Cursor reads before every generation. Think of them as lint for the model’s imagination. Whenever you ask Cursor to write code, it silently applies your rules as a moral compass, providing consistent guidance for specific projects or across everything a user works on.

---

description: "Baseline engineering guardrails for <your‑repo>"

globs:

- "**/*.py"

- "**/*.tf"

alwaysApply: true

---

- always write **pytest** unit tests for new functions

- always write **Google‑style docstrings**

- always add **PEP‑484 type hints**

- update **README.md** when features change

- use **UV** instead of pip for package management

- run **UV** for tests | lint | format

- run **ruff** for linting *and* formatting

- provision with **Terraform** on **AWS**

- expose APIs with **FastAPI** and CLIs with **Click**, both importing a shared *core* package

- prefer *shell one‑liners* over multi‑file bash scripts

- use **Polars** instead of pandas

- choose **deque** / *generators* over lists when order or laziness matters

- use **enumerate** instead of `range(len())`

- validate with **pydantic v2**

- represent constants via **Enum** + `@property`

- consider `__slots__` + `functools.lru_cache` to save RAM and latency
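To make a few of these rules concrete, here is a minimal sketch of what compliant output might look like. It uses only the standard library, and the `Region` enum, `bucket_name`, `numbered`, and `last_n` are hypothetical names invented for illustration, not part of any real project.

```python
from collections import deque
from collections.abc import Iterable, Iterator
from enum import Enum
from functools import lru_cache


class Region(Enum):
    """Hypothetical deployment regions, used only for illustration."""

    US_EAST = "us-east-1"
    EU_WEST = "eu-west-1"

    @property
    def is_eu(self) -> bool:
        """Whether the region identifier starts with the EU prefix."""
        return self.value.startswith("eu-")


@lru_cache(maxsize=128)
def bucket_name(region: Region, service: str) -> str:
    """Build a bucket name for a service in a region.

    Args:
        region: Target region.
        service: Short service identifier.

    Returns:
        The service identifier joined to the region value with a hyphen.
    """
    return f"{service}-{region.value}"


def numbered(lines: Iterable[str]) -> Iterator[str]:
    """Lazily prefix each line with its 1-based position."""
    # enumerate replaces the range(len(...)) pattern the rules forbid.
    for index, line in enumerate(lines, start=1):
        yield f"{index}: {line}"


def last_n(lines: Iterable[str], n: int) -> list[str]:
    """Return the final ``n`` items using a bounded deque."""
    return list(deque(lines, maxlen=n))
```

Each function carries type hints and a Google-style docstring, so a reviewer can check the output against the rule file line by line.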

Practical Setup

- Commit Day 0 — Add the rule file to the repo (current Cursor versions look for project rules under `.cursor/rules/`) and push before any code exists. This ensures developers who join later inherit discipline automatically. Consider using a cookie-cutter template for repos to enforce this.

- Keep Atomic — “Use UV” beats “Use modern tooling.” AI models comply better with unambiguous verbs. Garbage in, garbage out.

- Evolve via PR — Treat rule edits like production code. A one‑line change can ripple through every future generation. Codify your standards with your team, and keep them up-to-date.

Metric — Rule Violations/PR: Track how many rule violations the linter catches per PR each sprint. A downward trend suggests the contract is working.
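One way to collect that metric is to tally Ruff findings per rule code. The sketch below assumes the findings come from `ruff check --output-format json` and that each entry carries a `code` field. The `SAMPLE` payload is made up and trimmed to the fields the function reads.

```python
import json
from collections import Counter

# Hypothetical, trimmed output from `ruff check --output-format json`.
SAMPLE = (
    '[{"code": "F401", "filename": "app.py"},'
    ' {"code": "F401", "filename": "cli.py"},'
    ' {"code": "F841", "filename": "app.py"}]'
)


def violations_by_code(ruff_json: str) -> Counter[str]:
    """Count findings per rule code in a Ruff JSON report."""
    findings = json.loads(ruff_json)
    # Entries without a rule code are grouped under a placeholder key.
    return Counter(item.get("code") or "no-code" for item in findings)


counts = violations_by_code(SAMPLE)
total = sum(counts.values())
```

Storing `total` per PR in whatever dashboard you already use is enough to see the trend from sprint to sprint.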

Sandbox Fearlessly with Checkpoint / Restore

Cursor’s built‑in checkpoints snapshot the changes the agent makes to your workspace, giving you Git‑like safety without context‑switching (they complement Git rather than replace it). Our team uses the following micro‑loop:

| Step | Why It Matters |
| --- | --- |
| Checkpoint | Establish a rollback anchor before each purposeful change. |
| Write / Edit Code | Generate or tweak small units, which we treat as ≤30 lines of code (LOC). |
| Run Unit Tests | Immediate pass/fail feedback. |
| Refine Prompt | If tests fail, guide the model with specifics (“handle empty list”). |
| Restore (when needed) | Instantly revert hallucinated tangents. |

Tips for Effective Checkpointing

- Use New Chat to snapshot: each new chat automatically creates a restore point.

- Name Chat Meaningfully — `feat-jwt-middleware-start` beats `checkpoint-7`.

- Snapshot Before Refactor — Especially when renaming public interfaces; rollbacks become painless.

- Diff Checkpoints — Cursor’s visual diff highlights every line the AI touched, letting humans veto risky rewrites.

This routine lets junior devs experiment without fear while giving seniors the audit trail they need for compliance reviews.
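For readers who want a mental model of checkpoint and restore, the following sketch mimics the idea with plain directory copies. It is an analogy only: Cursor exposes checkpoints through its UI, and nothing here reflects how Cursor implements them. The `checkpoint` and `restore` helpers are hypothetical.

```python
import shutil
import tempfile
from pathlib import Path


def checkpoint(workspace: Path) -> Path:
    """Copy the workspace into a fresh temp directory and return the copy."""
    snapshot = Path(tempfile.mkdtemp(prefix="checkpoint-")) / "snapshot"
    shutil.copytree(workspace, snapshot)
    return snapshot


def restore(workspace: Path, snapshot: Path) -> None:
    """Discard the current workspace and put the snapshot back in its place."""
    shutil.rmtree(workspace)
    shutil.copytree(snapshot, workspace)


# Usage: snapshot, make a risky edit, then roll back.
workspace = Path(tempfile.mkdtemp(prefix="workspace-"))
(workspace / "app.py").write_text("x = 1\n")

anchor = checkpoint(workspace)
(workspace / "app.py").write_text("x = 2\n")      # the risky change
(workspace / "scratch.py").write_text("")         # a stray generated file
restore(workspace, anchor)
```

After `restore`, the edited file is back to its earlier content and the stray file is gone, which is the behavior the micro-loop relies on.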

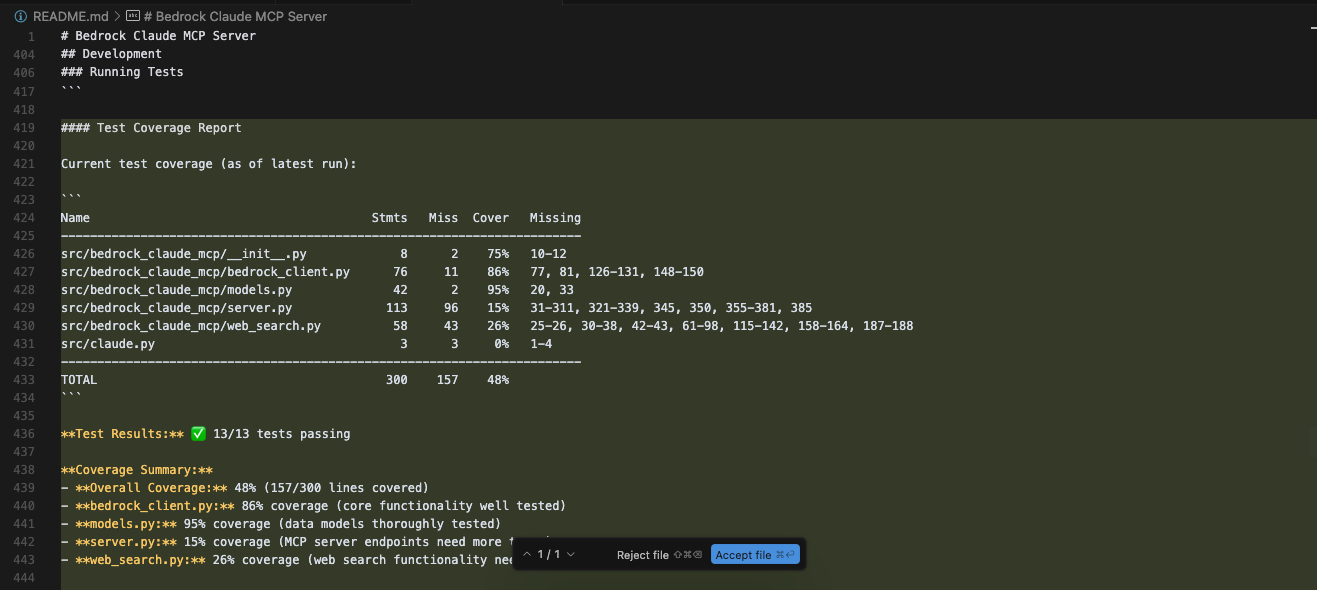

Convert Velocity into Confidence with Pytest‑Centric TDD

Cursor integrates a test runner pane, eliminating the usual excuse that “switching windows slows me down!”

Red‑Green‑Refactor Inside the IDE

Adapting the Red-Green-Refactor pattern to Cursor helps minimize tech debt by letting you focus on one concern at a time.

- Red — Author a failing test that captures the spec.

- Green — Prompt Cursor: “Implement `slugify(title: str) -> str`; pass tests and follow rule file.”

- Refactor — Run `uv run ruff format` and `uv run ruff check --fix` until the diff is squeaky‑clean.
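As a rough illustration of the red and green steps, the sketch below pairs pytest-style tests with one possible `slugify` implementation. The behavior shown (lowercasing, stripping accents, collapsing non-alphanumerics into hyphens) is an assumption about what the spec might ask for, not a definition taken from the workflow.

```python
import re
import unicodedata


def slugify(title: str) -> str:
    """Convert a title into a lowercase, hyphen-separated ASCII slug.

    Args:
        title: Free-form title text.

    Returns:
        The slug, or an empty string if nothing usable remains.
    """
    # Decompose accented characters, then drop anything outside ASCII.
    normalized = unicodedata.normalize("NFKD", title)
    ascii_only = normalized.encode("ascii", "ignore").decode("ascii")
    # Collapse every run of non-alphanumeric characters into one hyphen.
    slug = re.sub(r"[^a-z0-9]+", "-", ascii_only.lower())
    return slug.strip("-")


# Red: these tests are written first and fail until slugify exists.
def test_basic_title() -> None:
    assert slugify("Hello, World!") == "hello-world"


def test_accents_and_padding() -> None:
    assert slugify("  Crème Brûlée  ") == "creme-brulee"


def test_empty_input() -> None:
    assert slugify("") == ""
```

Writing the empty-input test up front is what turns a vague prompt into a spec the model has to satisfy.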

Automation Hotkeys

Bind these for muscle memory:

| Action | Command | Mac | Linux/Win |
| --- | --- | --- | --- |
| Run tests quietly | `pytest -q` | ⌘⇧T | Ctrl‑Shift‑T |
| Lint and auto‑fix with Ruff | `ruff check --fix` | ⌘⇧L | Ctrl‑Shift‑L |

Pro Tip — Ask Cursor to explain a failing assertion in plain English. Models often spotlight edge cases you didn’t foresee.

Coverage Gates

Add a pre-push hook: `pytest --cov=src --cov-fail-under=90` (the `--cov` flags come from the pytest-cov plugin). This way, Cursor writes the code, but you own the risk threshold.
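Git hooks can be any executable, so the gate can itself be written in Python. The sketch below assumes pytest and pytest-cov are installed in the active environment. `coverage_command` and `run_gate` are hypothetical helper names, and the runner is injectable so the logic can be exercised without launching pytest.

```python
import subprocess
import sys
from collections.abc import Callable, Sequence


def coverage_command(source: str = "src", minimum: int = 90) -> list[str]:
    """Build the pytest invocation that enforces the coverage floor."""
    return [
        sys.executable,
        "-m",
        "pytest",
        f"--cov={source}",
        f"--cov-fail-under={minimum}",
    ]


def run_gate(run: Callable[[Sequence[str]], int] = subprocess.call) -> int:
    """Run the gate and return its exit code; non-zero should block the push."""
    return run(coverage_command())
```

To use it as a hook, save the file as `.git/hooks/pre-push` with a Python shebang, make it executable, and end it with `raise SystemExit(run_gate())`.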

Prune Early, Prune Often

LLMs are verbose by design; they hedge by generating helpers, getters, and imports you may never need. Delay pruning for a day and you’ll watch bloat snowball into merge conflicts, so prune while each change is still small.

- Immediate Ruff Sweep — `ruff check --select F401,F841 --fix`. Flags unused imports & vars and auto-fixes what Ruff considers safe.

- Collapse Thin Wrappers — Functions that merely forward two args? Inline them.

- Delete Zombie TODOs — Any TODO older than 24 h graduates to a Jira ticket or the trash.

- Run `dead-code-detective` (open‑source) before release candidates.
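Thin wrappers are easy to spot mechanically. The sketch below uses the standard `ast` module to list functions whose only statement returns a call that forwards their own positional parameters unchanged. `find_thin_wrappers` is a hypothetical helper and deliberately conservative: it ignores keyword arguments, methods that do extra work, and async functions.

```python
import ast


def find_thin_wrappers(source: str) -> list[str]:
    """Return names of functions that only forward their arguments."""
    wrappers: list[str] = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.FunctionDef):
            continue
        body = node.body
        # Skip a leading docstring so documented wrappers are still caught.
        first = body[0]
        if (
            isinstance(first, ast.Expr)
            and isinstance(first.value, ast.Constant)
            and isinstance(first.value.value, str)
        ):
            body = body[1:]
        if len(body) != 1 or not isinstance(body[0], ast.Return):
            continue
        call = body[0].value
        if not isinstance(call, ast.Call) or call.keywords:
            continue
        params = [arg.arg for arg in node.args.args]
        passed = [arg.id for arg in call.args if isinstance(arg, ast.Name)]
        # Every argument must be a bare name, in the same order as the params.
        if len(passed) == len(call.args) and passed == params:
            wrappers.append(node.name)
    return wrappers


EXAMPLE = '''
def area(w, h):
    return w * h

def compute_area(w, h):
    """Forward to area."""
    return area(w, h)

def scaled(w, h):
    return area(w, h * 2)
'''

candidates = find_thin_wrappers(EXAMPLE)
```

Here only `compute_area` is reported, since `area` does real work and `scaled` transforms an argument before forwarding it.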

A ten‑second purge after each green test saves hours in code review later, when the details are no longer fresh in anyone's mind.

Retain Human Ownership

Cursor accelerates keystrokes; it does not absolve responsibility.

- Mandatory Peer Review — At least one human must sanity‑check every PR against the rule file.

- Documentation First Draft — Let the AI sketch README sections, then polish voice, accuracy, and security notes.

- CI Enforcement — GitHub Actions running UV, Ruff, Pytest, and a custom rule‑file linter. Merge blocked on any red light.

- Track Defect Density — Compare bugs/LOC pre‑ and post‑Cursor adoption. Our team saw 43 % fewer post‑release defects after two sprints.

Culture Shift — Treat Cursor as “a keen junior plus lint bot,” not a senior replacement. Humans retain architectural authority.
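The defect-density comparison above reduces to simple arithmetic. The sketch below normalizes bugs per thousand lines of code and reports the relative change. The bug counts and line counts are invented purely to show the calculation and are not measurements from any team.

```python
def defect_density(bugs: int, loc: int) -> float:
    """Return bugs per thousand lines of code (KLOC)."""
    if loc <= 0:
        raise ValueError("loc must be positive")
    return bugs / loc * 1000


def percent_change(before: float, after: float) -> float:
    """Return the relative change from before to after, in percent."""
    if before == 0:
        raise ValueError("before must be non-zero")
    return (after - before) / before * 100


# Hypothetical numbers, for illustration only.
before = defect_density(bugs=12, loc=30_000)
after = defect_density(bugs=9, loc=36_000)
change = percent_change(before, after)
```

Normalizing by LOC matters because the codebase usually grows during adoption, so raw bug counts alone would understate or overstate the effect.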

Putting It All Together: 30‑Minute Micro‑Sprints

- Plan — Pull a ticket (<2 h estimated).

- Checkpoint.

- Write failing test (red).

- Prompt Cursor to implement (green).

- Prune & format.

- Run coverage; enforce ≥90 %.

- Commit & Push.

Teams adopting this loop record 25–40 % shorter cycle times without slipping quality gates.

Final Thoughts & Call‑to‑Action

Cursor’s true superpower isn’t raw autocompletion — it’s the tight feedback loop between rules and reality. By codifying standards, iterating safely, testing relentlessly, and pruning mercilessly, you convert AI speed into sustainable quality.

Try Cursor out and run a one‑week experiment on a greenfield branch:

- Measure time‑to‑merge, review comments/PR, and escaped defects.

- Compare to your baseline sprint.

- Share your numbers — because the community needs data, not hype.
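A small script is enough to compare time-to-merge between the baseline and the experiment. The sketch below assumes you can export each PR's opened and merged timestamps as ISO 8601 strings. The two sample lists are hypothetical, and the median is used because a single long-lived PR can skew a mean.

```python
from datetime import datetime
from statistics import median


def hours_to_merge(opened: str, merged: str) -> float:
    """Return the hours between two ISO 8601 timestamps."""
    delta = datetime.fromisoformat(merged) - datetime.fromisoformat(opened)
    return delta.total_seconds() / 3600


def median_time_to_merge(prs: list[tuple[str, str]]) -> float:
    """Return the median hours-to-merge over (opened, merged) pairs."""
    return median(hours_to_merge(opened, merged) for opened, merged in prs)


# Hypothetical exports, for illustration only.
baseline = [
    ("2024-01-08T09:00", "2024-01-08T17:00"),
    ("2024-01-09T09:00", "2024-01-10T05:00"),
    ("2024-01-10T09:00", "2024-01-10T21:00"),
]
experiment = [
    ("2024-01-15T09:00", "2024-01-15T14:00"),
    ("2024-01-16T09:00", "2024-01-16T18:00"),
    ("2024-01-17T09:00", "2024-01-17T16:00"),
]

baseline_median = median_time_to_merge(baseline)
experiment_median = median_time_to_merge(experiment)
```

The same pattern extends to review comments per PR or escaped defects: keep the raw per-PR values so others can check the summary you share.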

If your metrics improve, bake the workflow into team onboarding. If they don’t, refine rules and coverage gates until they do. The goal isn’t to worship tools; it’s to ship resilient software at startup velocity.

Interested in exploring this further or implementing a solution tailored to your needs? Contact us at newmathdata.com to discuss technical details, architecture considerations, or integration strategies.