A Practical Playbook for Design, Rollout, and Metrics

TL;DR: Start with a small set of high-value workflows. Use serverless primitives to control cost, Model Context Protocol (MCP) connectors for system access, and a Human-in-the-Loop (HITL) gate for risky actions. Ship via IaC and CI/CD. Prove safety and UX in a pilot/UAT, then scale. Instrument everything: errors → Jira, approvals/rejections → policy tuning, and tokens → cost centers.

Why enterprise agents (and why now)

Modern agents can finally move beyond chat and perform real work across calendars, docs, tickets, and admin consoles. The technical ingredients — reliable LLMs, mature APIs, and standards like MCP — exist. What blocks production value isn’t model quality; it’s design discipline: least-privilege access, rigorous approvals for writes, and observability that connects cost to outcomes. This playbook outlines a repeatable path from requirements to rollout, including the metrics necessary to operate the system like any other enterprise service.

Design

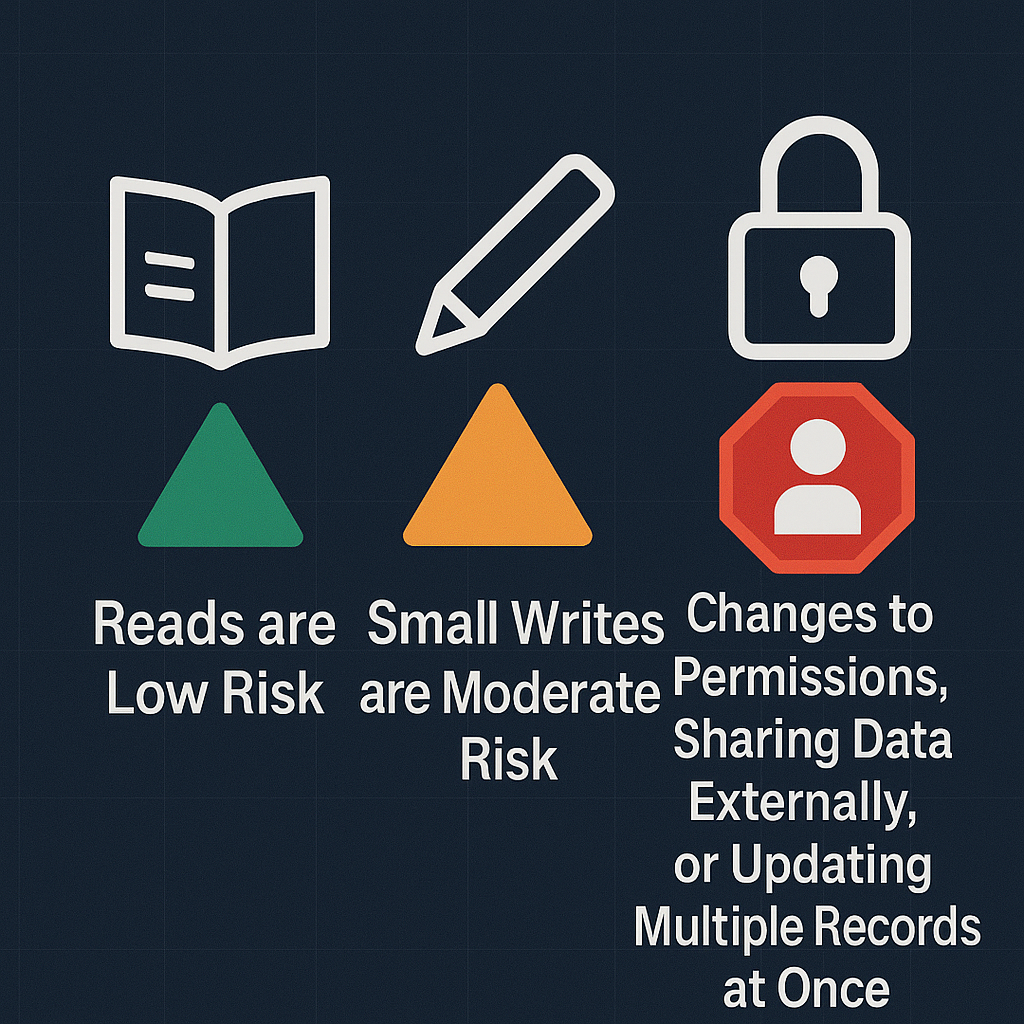

Every durable agent program starts with real stakeholder discovery. Sit with the teams who spend the most time in Google Drive, Calendar, Jira, Google Admin, and similar systems. Catalogue the exact tasks that eat their week: creating documents from a template, scheduling multi-party meetings, moving files to governed folders, updating tickets, or executing routine user-admin actions. Don’t chase novelty; prioritize repetitive tasks with clear inputs and expected outputs. While mapping these flows, classify risks: reads are low risk, small writes are moderate, and any change to permissions, external data sharing, or bulk update of multiple records should be gated by Human-in-the-Loop (HITL) approval. Define success before you build: the target completion time, acceptable error rate, how often you expect HITL to trigger, and a monthly per-user cost ceiling.
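The risk tiers above can be sketched as a small classifier. This is a minimal illustration, not a prescribed implementation: the `Action` fields and the rule that more than one record counts as a bulk update are assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum


class Risk(Enum):
    LOW = "low"            # reads
    MODERATE = "moderate"  # small, contained writes
    HITL = "hitl"          # must be approved by a human


@dataclass(frozen=True)
class Action:
    """Hypothetical description of what the agent wants to do."""
    kind: str                          # "read" or "write"
    changes_permissions: bool = False
    shares_externally: bool = False
    record_count: int = 1


def classify(action: Action) -> Risk:
    # Gated conditions are checked first so a mislabeled action cannot skip review.
    if (action.changes_permissions
            or action.shares_externally
            or action.record_count > 1):
        return Risk.HITL
    if action.kind == "read":
        return Risk.LOW
    return Risk.MODERATE
```

In practice the bulk threshold would likely be tuned per connector rather than fixed at one record.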

Keep the backbone simple and serverless. Agent traffic is bursty, so pay-per-request services avoid idle cost and naturally constrain blast radius. An HTTP gateway fronts a durable workflow engine that manages retries, backoffs, and waits during HITL. Asynchronous steps run through an event bus and queues, so one slow connector doesn’t stall everything else. Store small amounts of state in a key-value database and keep artifacts in object storage; enforce strict timeouts everywhere. Secrets live in a managed store with rotation and narrow scoping. The rule of thumb is small, idempotent handlers with predictable failure modes and dead-letter queues for the things that still slip through.
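A small idempotent handler with retries and a dead-letter path might look like the sketch below. The in-memory `processed` dict and `dead_letters` list are stand-ins for a key-value table and a dead-letter queue, and the `idempotency_key` field name is an assumption for illustration.

```python
import time
from typing import Callable, Dict, List, Optional

processed: Dict[str, str] = {}   # stand-in for a key-value table
dead_letters: List[dict] = []    # stand-in for a dead-letter queue


def handle(event: dict,
           work: Callable[[dict], str],
           max_attempts: int = 3,
           base_delay: float = 0.0) -> Optional[str]:
    """Run `work` at most once per idempotency key, retrying with backoff."""
    key = event["idempotency_key"]
    if key in processed:
        # Duplicate delivery: return the stored result instead of redoing the work.
        return processed[key]

    last_error = ""
    for attempt in range(max_attempts):
        try:
            result = work(event)
        except Exception as exc:
            last_error = repr(exc)
            # Exponential backoff: base_delay, 2x, 4x, ...
            time.sleep(base_delay * (2 ** attempt))
        else:
            processed[key] = result
            return result

    # Retries exhausted: park the event for inspection rather than dropping it.
    dead_letters.append({"event": event, "error": last_error})
    return None
```

A managed workflow engine usually provides the retry and backoff behavior itself; the point of the sketch is the shape of the handler, not a replacement for that engine.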

On connectors, adopt a Model Context Protocol pattern so each integration is explicit and testable. Every connector declares its capabilities, the exact scopes it needs, and the schemas it accepts and returns. Pre-validators reject requests that don’t match scope or payload constraints; post-validators sanitize outputs and tag data classifications. Rate limits are enforced per tenant, not just globally, to prevent one enthusiastic team from consuming everyone else’s budget. Concretely, Drive can search and read by default; creates and moves are permitted only inside allow-listed folders or with approval. Calendar reads free/busy widely, but creating external events triggers HITL. Jira reads across projects; transitions and bulk edits are limited to allow-listed projects and always prompt for review once the change count crosses a small threshold. Google Admin remains read-mostly; any user or group change goes through approval.
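To make the Drive rule concrete, here is a hedged sketch of a connector policy with a pre-validator. It does not use any MCP SDK; `ConnectorPolicy`, `pre_validate`, and the folder identifier are hypothetical names chosen for the example.

```python
from dataclasses import dataclass
from typing import FrozenSet, Optional


@dataclass(frozen=True)
class ConnectorPolicy:
    """Hypothetical declaration of what a connector may do."""
    name: str
    read_ops: FrozenSet[str]
    write_ops: FrozenSet[str]
    allowed_targets: FrozenSet[str] = frozenset()


# Drive: search and read by default; create and move only in allow-listed folders.
DRIVE = ConnectorPolicy(
    name="drive",
    read_ops=frozenset({"search", "read"}),
    write_ops=frozenset({"create", "move"}),
    allowed_targets=frozenset({"folder-governed-templates"}),
)


def pre_validate(policy: ConnectorPolicy, op: str,
                 target: Optional[str] = None) -> str:
    """Return 'allow', 'needs_approval', or 'reject' for a requested operation."""
    if op in policy.read_ops:
        return "allow"
    if op in policy.write_ops:
        # Writes outside the allow-list are not rejected; they go to HITL.
        return "allow" if target in policy.allowed_targets else "needs_approval"
    # Anything the connector did not declare is out of scope.
    return "reject"
```

Keeping the policy as data makes it easy to review in a pull request and to exercise in CI without calling the real API.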

Treat HITL as a user experience, not a safety band-aid. When a policy triggers, the agent prepares a clear diff — what will change, why it’s being proposed, and how to roll it back. Approvers receive a compact card (often in Slack or email) with a one-click approve/reject and a structured reason. Approvals expire so stale context doesn’t slip through a week later. Decisions are logged with timestamps, identities, and the exact policy rule that fired. Over time, you’ll discover classes of changes that are rubber-stamped; remove those from the approval path. The noisy ones will cluster by reason — external domain invites, bulk ticket edits, missing owners — and that’s where you tighten rules, raise thresholds, or improve prompts.
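The approval record described above could be modeled roughly as follows. The field names, the 24-hour expiry, and the reason codes are all assumptions for illustration; the essential parts are the diff, the rollback note, the expiry check, and the structured reason.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical structured reasons; free-text rationales are deliberately not accepted.
REASON_CODES = {"verified_recipient", "external_domain", "bulk_edit", "missing_owner"}


@dataclass
class ApprovalRequest:
    policy_rule: str          # the exact rule that fired
    diff: str                 # what will change
    rationale: str            # why the agent proposes it
    rollback: str             # how to undo it
    created_at: datetime
    ttl: timedelta = timedelta(hours=24)
    decision: Optional[str] = None
    reason_code: Optional[str] = None
    decided_by: Optional[str] = None
    decided_at: Optional[datetime] = None

    def is_expired(self, now: datetime) -> bool:
        return now >= self.created_at + self.ttl


def decide(req: ApprovalRequest, approver: str, decision: str,
           reason_code: str, now: datetime) -> ApprovalRequest:
    """Record a decision, refusing stale requests and unstructured reasons."""
    if req.decision is not None:
        raise ValueError("request already decided")
    if req.is_expired(now):
        raise TimeoutError("approval expired; the agent must re-propose")
    if decision not in {"approved", "rejected"}:
        raise ValueError("decision must be 'approved' or 'rejected'")
    if reason_code not in REASON_CODES:
        raise ValueError("a structured reason code is required")
    req.decision, req.reason_code = decision, reason_code
    req.decided_by, req.decided_at = approver, now
    return req
```

Passing `now` in explicitly keeps the expiry logic easy to test without patching the clock.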

Production from day one means IaC and CI/CD. Infrastructure and policy are versioned, reviewed, and promoted like code. Terraform (or equivalent) owns the gateway, workflows, queues, tables, roles, secrets, and dashboards. Contracts for each connector are tested in CI with mocked APIs and golden responses. Security scanners audit dependencies and infrastructure definitions on every change. Promotion flows from dev to test to prod with manual gates for policy updates. Configuration for models, token ceilings, feature flags, and budgets is centralized so you can change behavior without redeploying everything.
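A connector contract test with a mocked API and a golden response can be as small as the sketch below. The `fetch_document` function, the `/documents/...` path, and the golden payload are hypothetical; only `unittest.mock.Mock` from the standard library is real.

```python
import json
from unittest.mock import Mock

# Golden response: the exact shape the connector promises to return.
GOLDEN = json.loads('{"id": "DOC-1", "title": "Q3 plan", "owner": "a@example.com"}')


def fetch_document(client, doc_id: str) -> dict:
    """Hypothetical connector call that returns only the contracted fields."""
    raw = client.get(f"/documents/{doc_id}")
    return {"id": raw["id"], "title": raw["title"], "owner": raw["owner"]}


def test_fetch_document_matches_golden() -> None:
    client = Mock()
    # The upstream API returns an extra field that must not leak through.
    client.get.return_value = {**GOLDEN, "internal_etag": "xyz"}

    assert fetch_document(client, "DOC-1") == GOLDEN
    client.get.assert_called_once_with("/documents/DOC-1")
```

A test runner such as pytest would collect this function by name; it can also be called directly.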

Finally, wrap the design with hygiene: data classification and redaction before anything becomes an embedding or leaves your boundary; role-based access mapped to connector scopes with no “god mode” service accounts; outbound allowlists for web and retrieval so agents don’t wander. Support multiple model providers, but constrain each step with token caps, temperature, and stop sequences. Run evaluation suites for jailbreaks, leakage, and hallucination-prone prompts before you ship.
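Two of these hygiene checks, redaction and an outbound allowlist, are sketched below. The email pattern is deliberately simple and the allow-listed host is a placeholder; a real deployment would rely on a proper classification service and a managed egress policy.

```python
import re
from urllib.parse import urlparse

# Simplified pattern for illustration; it will not catch every address format.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")

# Hypothetical allowlist of hosts the agent may fetch from.
ALLOWED_HOSTS = {"docs.example.com"}


def redact(text: str) -> str:
    """Mask email addresses before text is embedded or sent outside the boundary."""
    return EMAIL.sub("[REDACTED_EMAIL]", text)


def is_allowed_url(url: str) -> bool:
    """Permit only HTTPS requests to allow-listed hosts."""
    parsed = urlparse(url)
    return parsed.scheme == "https" and parsed.hostname in ALLOWED_HOSTS
```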

Rollout

Start with a small pilot that proves both safety and value. Two or three workflows, twenty to fifty users, and two to four weeks are enough. Week one runs read-only in shadow mode so you can observe policy triggers without touching production data; week two introduces writes behind approval. Use the smallest possible scopes for each persona and widen only when you hit a documented block. Publish crisp quick-starts: what the agent can do, what triggers HITL, and how to request new skills. Set exit criteria in advance: at least 80% task success without manual rescue, fewer than 2% surprise approvals, zero unauthorized writes, and a cost that stays within the target you set during discovery.
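The exit criteria can be checked mechanically at the end of the pilot. The sketch below assumes the 2% surprise-approval figure is measured against total tasks, which the text does not specify; the `PilotStats` fields are likewise hypothetical.

```python
from dataclasses import dataclass
from typing import Dict


@dataclass(frozen=True)
class PilotStats:
    tasks_total: int
    tasks_succeeded_unaided: int   # completed without manual rescue
    surprise_approvals: int
    unauthorized_writes: int
    cost_per_user: float
    cost_ceiling_per_user: float   # target set during discovery


def exit_criteria(s: PilotStats) -> Dict[str, bool]:
    """Evaluate each pilot exit criterion separately so failures are visible."""
    if s.tasks_total <= 0:
        raise ValueError("pilot has no recorded tasks")
    return {
        "task_success": s.tasks_succeeded_unaided / s.tasks_total >= 0.80,
        # Assumption: surprise approvals are counted as a share of all tasks.
        "surprise_approvals": s.surprise_approvals / s.tasks_total < 0.02,
        "unauthorized_writes": s.unauthorized_writes == 0,
        "cost": s.cost_per_user <= s.cost_ceiling_per_user,
    }


def ready_to_scale(s: PilotStats) -> bool:
    return all(exit_criteria(s).values())
```

Returning the per-criterion results, rather than a single boolean, makes it clear which gate blocked the rollout.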

For enterprise rollout, keep communications short and factual — what’s changing, why it matters, how to use it, and where to get help. Ship one-page persona guides with concrete examples. From an operations standpoint, enable per-tenant quotas and per-connector burst limits, pre-warm critical paths, and shard any hot partitions. Budget alarms fire soft warnings at eighty percent and enforce hard stops at one hundred with an override path. Establish on-call rotations for the platform, each connector, and HITL approvers; set a weekly change window for enabling new write actions or relaxing policy. Require evidence — tests, traces, and a risk note — before you make those changes permanent.
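The budget alarm behavior (soft warning at eighty percent, hard stop at one hundred, with an override path) reduces to a small function. The state names and the boolean `override` flag are assumptions for illustration.

```python
def budget_state(spent: float, cap: float, override: bool = False) -> str:
    """Map spend against a cap to 'ok', 'soft_warning', 'hard_stop', or 'allow_with_override'."""
    if cap <= 0:
        raise ValueError("cap must be positive")
    ratio = spent / cap
    if ratio >= 1.0:
        # At or over budget: block unless someone has explicitly granted an override.
        return "allow_with_override" if override else "hard_stop"
    if ratio >= 0.8:
        return "soft_warning"
    return "ok"
```

For example, `budget_state(85, 100)` yields a soft warning, while `budget_state(100, 100)` blocks further requests until an override is granted.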

Metrics

Good agent programs live or die by instrumentation. A compact event schema powers operations, finance, and governance all at once. Each step emits the tenant, user persona, workflow, step name, model identifier, input and output tokens, computed cost, result state (success, error, pending approval, approved, or rejected), error code when relevant, latency, risk score, and, if applicable, the approval or rejection reason. A single trace ID ties the orchestrator, connectors, and approval UI together.
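One possible shape for that event, as a sketch: the field names follow the list above, while the class name, the snake_case result states, and the per-1k-token pricing parameters are assumptions.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional

RESULT_STATES = {"success", "error", "pending_approval", "approved", "rejected"}


def compute_cost(input_tokens: int, output_tokens: int,
                 usd_per_1k_in: float, usd_per_1k_out: float) -> float:
    """Cost from token counts; prices are supplied by the caller, not hard-coded."""
    return input_tokens / 1000 * usd_per_1k_in + output_tokens / 1000 * usd_per_1k_out


@dataclass(frozen=True)
class StepEvent:
    trace_id: str            # ties orchestrator, connectors, and approval UI together
    tenant: str
    persona: str
    workflow: str
    step: str
    model: str
    input_tokens: int
    output_tokens: int
    cost_usd: float
    result: str
    latency_ms: int
    risk_score: float
    error_code: Optional[str] = None
    decision_reason: Optional[str] = None   # approval or rejection reason

    def __post_init__(self) -> None:
        if self.result not in RESULT_STATES:
            raise ValueError(f"unknown result state: {self.result}")

    def to_json(self) -> str:
        return json.dumps(asdict(self), sort_keys=True)
```

Validating the result state at construction time keeps malformed events out of the dashboards built on top of this schema.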

With that in place, close the loop on errors by auto-triaging them into Jira. Fingerprint recurrent faults and deduplicate by trace to avoid ticket spam. Dashboards should highlight the top error classes, the workflows they affect, and the time to first fix. Define SLOs by step: reads should succeed at least ninety-eight percent of the time and post-HITL writes at least ninety-five percent, with P95 latency held to its own target. Track latency alongside success, because slow success is often perceived as failure by users.
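Fingerprinting and deduplication before filing tickets might be sketched like this. The normalization rule (lowercasing and replacing digit runs) and the in-memory `Triage` class are assumptions; the actual Jira call is intentionally left out.

```python
import hashlib
import re
from typing import Dict


def fingerprint(workflow: str, step: str, error_code: str, message: str) -> str:
    """Stable ID for an error class; digits are masked so IDs and timings do not split it."""
    normalized = re.sub(r"\d+", "N", message.lower())
    raw = "|".join([workflow, step, error_code, normalized])
    return hashlib.sha256(raw.encode("utf-8")).hexdigest()[:16]


class Triage:
    """In-memory stand-in for the ticket index."""

    def __init__(self) -> None:
        self.open: Dict[str, dict] = {}

    def record(self, fp: str, trace_id: str) -> bool:
        """Return True only when a new ticket should be filed."""
        ticket = self.open.get(fp)
        if ticket is None:
            self.open[fp] = {"count": 1, "traces": {trace_id}}
            return True
        if trace_id not in ticket["traces"]:
            # Same fault from a new trace: bump the count, do not open another ticket.
            ticket["traces"].add(trace_id)
            ticket["count"] += 1
        return False
```

The occurrence count on each open fingerprint is what a dashboard would use to rank the top error classes.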

For HITL, measure approval rates, decision latency, and the distribution of reasons. Long tails suggest staffing or UX problems; clusters of rejections often indicate a policy that’s too permissive or a prompt that invites ambiguity. Remove approvals for changes that are always accepted, and make it harder to initiate those that consistently get rejected. You are tuning a control surface, not just auditing.
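Tuning the control surface can start from approval rates per policy rule. In the sketch below, the minimum sample size and both thresholds are illustrative assumptions, not recommended values.

```python
from collections import Counter
from typing import Dict, Iterable, Tuple


def rule_recommendations(decisions: Iterable[Tuple[str, str]],
                         min_samples: int = 20,
                         auto_threshold: float = 0.98,
                         block_threshold: float = 0.10) -> Dict[str, str]:
    """Suggest a tuning action per policy rule from (rule, decision) pairs."""
    totals: Counter = Counter()
    approved: Counter = Counter()
    for rule, decision in decisions:
        totals[rule] += 1
        if decision == "approved":
            approved[rule] += 1

    out: Dict[str, str] = {}
    for rule, n in totals.items():
        if n < min_samples:
            out[rule] = "keep"                 # not enough data to change policy
            continue
        rate = approved[rule] / n
        if rate >= auto_threshold:
            out[rule] = "remove_approval"      # rubber-stamped
        elif rate <= block_threshold:
            out[rule] = "tighten_upstream"     # consistently rejected
        else:
            out[rule] = "keep"
    return out
```

Recommendations like these are inputs to a reviewed policy change, not something to apply automatically.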

Cost should be attributed per request and rolled up to business functions. Map each workflow to its owning function — Sales, Support, IT — and compute dollars per successful outcome. Add sensible budget guards: monthly caps by tenant with soft alerts and hard stops, plus per-step token ceilings to prevent a single prompt from ballooning unexpectedly. Optimization levers are straightforward: cache prompts and retrieval snippets, tighten context windows, prefer cheaper models for low-risk steps, and batch operations where possible.
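Dollars per successful outcome can be computed with a simple roll-up. This sketch assumes each event represents one workflow run with `workflow`, `cost_usd`, and `result` keys, and that failed runs still count toward cost; the mapping of workflows to functions is hypothetical.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional


def cost_per_outcome(events: Iterable[dict],
                     workflow_owner: Dict[str, str]) -> Dict[str, Optional[float]]:
    """Total spend divided by successful runs, grouped by owning business function."""
    cost: Dict[str, float] = defaultdict(float)
    wins: Dict[str, int] = defaultdict(int)
    for e in events:
        fn = workflow_owner[e["workflow"]]
        cost[fn] += e["cost_usd"]          # failed runs still cost money
        if e["result"] == "success":
            wins[fn] += 1
    # None signals spend with no successful outcome yet.
    return {fn: (cost[fn] / wins[fn] if wins[fn] else None) for fn in cost}
```

Including the cost of failed runs in the numerator is a design choice: it makes unreliable workflows look as expensive as they actually are.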

Adoption metrics complete the picture. At the user level, watch daily and weekly active usage, tasks completed, time saved versus the baseline process, and interaction with HITL. At the org level, look at workflow coverage, approval latency, avoided escalations, and cost per outcome. Health metrics — queue depth, throttles, error budget burn, and dead-letter age — tell you when to scale or investigate.

A minimal dashboard set keeps everyone aligned. An operations overview tracks success rate, error rate, P95 latency, dead-letter size, and hot workflows. A HITL panel shows pending approvals by age, acceptance rates, top rejection reasons, and approver workload. FinOps summarizes cost by workflow and function, tokens per step, budgets, and anomalies. An adoption view covers DAU/WAU, top workflows, cohort retention, and estimated time savings. All of them should filter by tenant, persona, workflow, and time range — with drill-downs to traces when something looks off.

Common pitfalls (and how to avoid them)

- Vague approvals: “Looks fine” is not a rationale. Enforce structured reasons and capture the triggering policy.

- Scope creep in connectors: start read-only; introduce writes only with explicit allowlists and tests.

- Underspecified metrics: if you can’t calculate cost per successful outcome, you can’t manage budgets.

- Orchestrator as a monolith: keep handlers stateless and small; isolate failures to a single step.

- One-shot prompts: evaluate and iterate prompts like code; add tests for jailbreaks and leakage.

- Human latency: unstaffed approver queues kill value. Track approval cycle times and assign owners.

Close

Enterprise agents succeed when they are boringly reliable: least-privilege by default, approvals where it matters, and metrics tied to business outcomes. Build the rails (serverless backbone, MCP connectors, HITL, IaC and CI/CD), start with small workflows in a pilot, and let data drive policy changes and scale-out. With disciplined design and clear ownership, agents stop being demos and start delivering measurable time savings, safer operations, and predictable cost per outcome.