Clean Code’s Hidden Impact: Unraveling the Python Performance Paradox

Python Performance: Issue 1 – The Polymorphism Rule. Welcome to the Python Performance blog series. In this series, I will explore various performance topics in Python, with the aim of creating a list of heuristics to help developers write more performant Python code before they ever start thinking about reaching […]

Revolutionizing Data Management in AWS: The Case for Apache Iceberg Over Traditional Table Formats

Introduction: In the digital era, where data is king, the choice of table format for data storage and processing is crucial. Common file formats like CSV, Avro, and Parquet have long been the go-to solutions in various data handling scenarios. However, with the evolving needs of big data and cloud computing, newer and more efficient […]

Serverless, Fan-out Architecture Using SNS, SQS, and Lambda

Case Study: AWS re:Invent 2023 featured a lab session on building a serverless architecture with SNS, SQS, and Lambda. I found this lab particularly valuable because it helped me design a solution to a problem where data was being throttled through a single chokepoint. In this case, a large batch of data was being […]

Data Engineering Methodology: From requirements to hand-off

Introduction: Joining or starting data projects in large enterprise environments with many stakeholders can be stressful, not to mention a technical implementation nightmare. When the primary stakeholders can’t (or won’t) give the project team clear requirements, the onus falls to the technical implementation team to create order from the chaos and organize the delivery team […]

Remote Development in SageMaker Studio with VS Code

Disclaimer about Changes to SageMaker Studio: As of Nov. 30, 2023, SageMaker Studio has undergone major changes. Existing SageMaker Studio customers will get the default experience, now called SageMaker Studio Classic; this is the Studio experience this article was written for. New SageMaker Studio customers (and existing customers that choose to […]

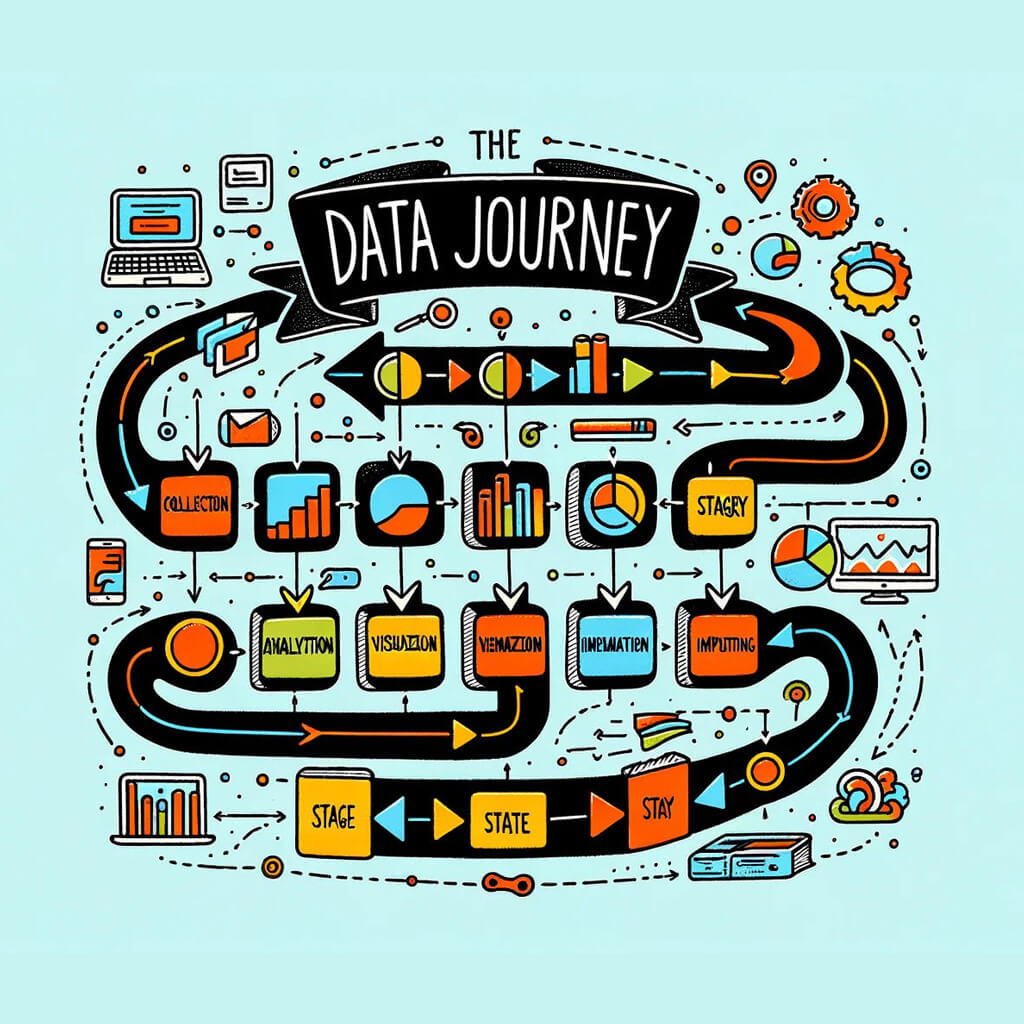

The Data Journey

Many organizations share similar challenges in growing their operational capabilities with data. I have given several talks on data lake design and on avoiding the “swampiness” of a data lake; invariably, there are pockets of mess or a “junk drawer” where people hide little bits of critical information. A complex data environment with myriad source […]

Boost AI Fairness and Explainability with Amazon SageMaker Clarify

From hiring decisions to loan approvals and even healthcare recommendations, machine learning (ML) affects our lives daily. In this context, fairness and explainability are crucial. Fairness means that the data is balanced and that model predictions do not systematically favor or disadvantage particular groups. Checking for fairness helps ensure that negative outcomes are not disproportionately concentrated in groups defined by attributes such as age or gender. Explainability […]

DBT and Databricks Part 3: Loading NoSQL data (from MongoDB) into Databricks

This series of blog posts illustrates how to use DBT with Azure Databricks: set up a connection profile, work with Python models, and copy NoSQL data (from MongoDB) into Databricks. In this third part, we walk through one specific example of loading NoSQL data (originally from MongoDB) into Databricks. Task: We have […]

DBT and Databricks Part 2: Working with Python models

This series of blog posts illustrates how to use DBT with Azure Databricks: set up a connection profile, work with Python models, and copy NoSQL data (from MongoDB) into Databricks. In this second part, we cover working with Python models. Python support was added to DBT in version 1.3. As of now […]

DBT and Databricks Part 1: Setting up a DBT profile for connecting to Azure Databricks using…

This series of blog posts illustrates how to use DBT with Azure Databricks: set up a connection profile, work with Python models, and copy NoSQL data (from MongoDB) into Databricks. In this first part, we cover how to set up a profile when using the dbt-databricks Python package. Install the dbt-databricks Python package using pip […]