Understanding Core Concepts of Agentic AI

A core challenge in Artificial Intelligence (AI) is reasoning under uncertainty. Historically, AI models were used transactionally for well-defined objectives. However, with the knowledge and reasoning capabilities that have emerged in Large Language Models (LLMs) over the last decade, more complex and open-ended problems can now be tackled autonomously.

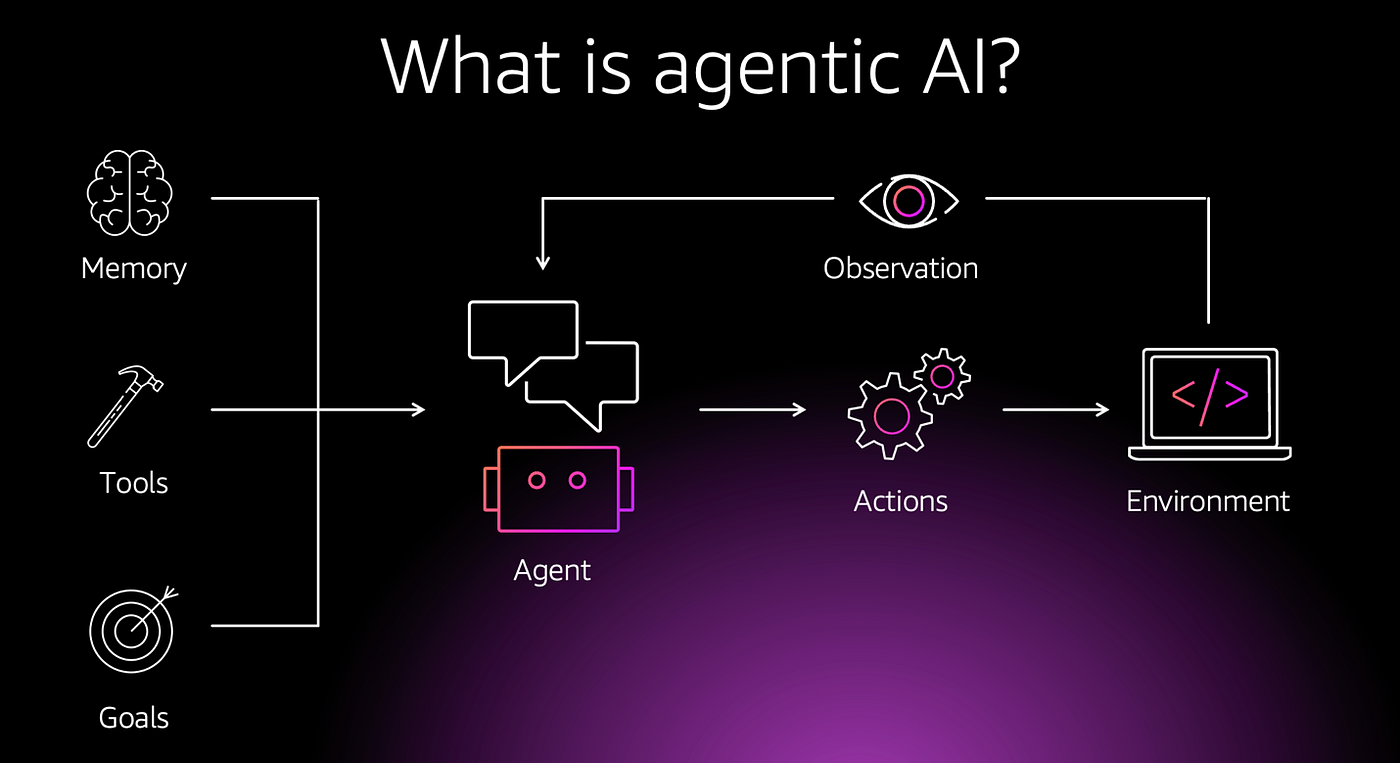

Agentic AI is an emerging subfield building on this foundation. The core distinction is agency: the ability to plan and autonomously interact with environments to execute actions in pursuit of higher-level objectives without relying on human pre-planning and manual oversight.

The remainder of this article outlines several fundamental components of modern agentic AI, offering clarity for readers who may have encountered these terms in conversation but are unsure of their precise definitions or practical roles. These concepts include:

- The context needed to enable autonomous reasoning.

- The use of tools and actions that extend models’ capabilities.

- Orchestration/coordination mechanisms for multi-step reasoning and multi-agent collaboration.

- Standardized protocols for external communication and integration.

- Guardrails and safety considerations to ensure reliability.

Together, these elements form the architectural and operational basis of agentic AI. If you’re a software engineer, engineering leader, or just looking to establish an agentic AI foundation, you have come to the right place!

Context and Memory

Architecturally, LLMs use information provided in their finite context window to generate outputs. Performance often relies on giving the model access to extrinsic information to improve the LLM’s ability to reason about a particular domain/task. This can include user input and template prompts, as well as information retrieved from external data sources (e.g. APIs, databases, files, etc.) or memory.

LLMs are stateless between calls, and the context window typically holds only ephemeral information. To overcome this, persistent storage is often used to keep interactions consistent and to make relevant historical information available when it is needed for the current task.

In agentic systems, the LLM is made aware of these data sources and can pull data into the context window on demand, or save new information to memory for subsequent use. Unlike traditional Retrieval Augmented Generation (RAG) pipelines, agentic systems can retrieve and update context dynamically, using reasoning to determine what information is necessary at each step. This adaptive retrieval makes workflows more robust and flexible, and is often implemented through defined data sources or tool/function calls (depending on the implementation framework).
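As a rough illustration of on-demand retrieval, the sketch below assembles a context string from a template prompt, the user input, and (only when requested) hits from a persistent store. Everything here is hypothetical: `MemoryStore`, `build_context`, and the `needs_history` flag are invented for this example, a plain dict stands in for a real database, and the keyword match stands in for whatever retrieval method a real system would use.

```python
from dataclasses import dataclass, field


@dataclass
class MemoryStore:
    """Hypothetical persistent store; a dict stands in for a database or file."""
    records: dict[str, str] = field(default_factory=dict)

    def save(self, key: str, value: str) -> None:
        self.records[key] = value

    def search(self, query: str) -> list[str]:
        # Naive keyword match on longer words; real systems often use
        # vector similarity or a query language instead.
        terms = [t for t in query.lower().split() if len(t) > 3]
        return [v for k, v in self.records.items()
                if any(t in k.lower() or t in v.lower() for t in terms)]


def build_context(user_input: str, memory: MemoryStore, needs_history: bool) -> str:
    """Assemble the prompt; retrieval happens only when the step calls for it."""
    parts = ["You are a helpful assistant."]  # template prompt
    if needs_history:  # in an agentic system, the model would make this decision
        parts.extend(f"Memory: {hit}" for hit in memory.search(user_input))
    parts.append(f"User: {user_input}")
    return "\n".join(parts)


memory = MemoryStore()
memory.save("shipping preference", "Customer prefers express shipping.")
context = build_context("What shipping should I use?", memory, needs_history=True)
print(context)
```

In a real agent the decision to retrieve would typically be expressed as a tool call made by the model rather than a boolean passed in by the caller.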

Tools

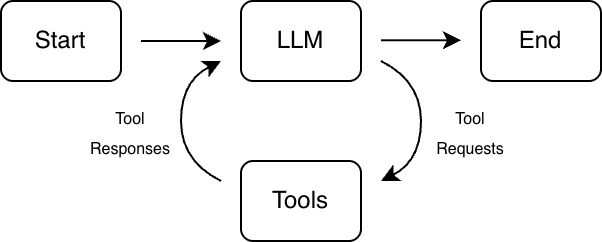

Tools are executable functions that AI agents can invoke during inference. Tools allow deterministic functionality to be integrated with the otherwise stochastic processing of LLMs. This keeps business logic and operations well-defined: once a tool is invoked, its behavior is governed by code rather than by model output.

While the exact process can differ, all major LLM API providers, such as OpenAI, Anthropic, or Amazon Bedrock, enable developers to define tools and include them with requests. This involves writing a function that the LLM should be able to invoke, and then defining a schema for the tool, including details such as:

- Name

- Description

- Input parameters (and accompanying types and descriptions)

The more clearly you convey the purpose and description of the tool, the easier it will be for the LLM to invoke it correctly. With well-defined tools in place, an LLM can choose to return a structured response indicating that it wants to use a tool, along with the associated arguments. After the application parses this response and calls the associated function, the result can be given back to the LLM in a follow-up API call, enabling continued processing until the final objective is reached.
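The loop described above can be sketched without any provider SDK. In the example below, `fake_llm` is a hypothetical stand-in that returns canned replies, and the schema field names (`input_schema`, `tool_use`, and so on) are illustrative assumptions; each provider defines its own exact request and response layout.

```python
import json


def get_weather(city: str) -> str:
    """The deterministic function the model is allowed to invoke."""
    return f"Sunny in {city}"


# Schema describing the tool: name, description, and typed input parameters.
# Field names are illustrative; each provider defines its own exact layout.
WEATHER_TOOL = {
    "name": "get_weather",
    "description": "Return the current weather for a given city.",
    "input_schema": {
        "type": "object",
        "properties": {"city": {"type": "string", "description": "City name"}},
        "required": ["city"],
    },
}

TOOL_REGISTRY = {"get_weather": get_weather}


def fake_llm(messages: list[dict], tools: list[dict]) -> dict:
    """Stand-in for a provider API call (hypothetical, returns canned replies)."""
    if messages[-1]["role"] == "tool":
        return {"type": "text", "text": f"Forecast: {messages[-1]['content']}"}
    return {"type": "tool_use", "name": "get_weather",
            "arguments": json.dumps({"city": "Paris"})}


def run_agent(prompt: str, max_turns: int = 5) -> str:
    messages = [{"role": "user", "content": prompt}]
    for _ in range(max_turns):
        reply = fake_llm(messages, [WEATHER_TOOL])
        if reply["type"] == "text":          # final answer reached
            return reply["text"]
        func = TOOL_REGISTRY[reply["name"]]  # look up the requested tool
        result = func(**json.loads(reply["arguments"]))
        # Feed the tool result back for the follow-up call.
        messages.append({"role": "tool", "content": result})
    raise RuntimeError("No final answer within max_turns")


answer = run_agent("What is the weather in Paris?")
print(answer)
```

The `max_turns` cap is a design choice for this sketch: it bounds the loop so that a model that keeps requesting tools cannot run indefinitely.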

Furthermore, while tools can be integrated into LLM API calls directly, many frameworks exist (e.g., Strands, LangChain, Bedrock AgentCore, etc.) that abstract away much of the effort needed to define schemas and to manage the execution loop that iteratively handles LLM API calls.

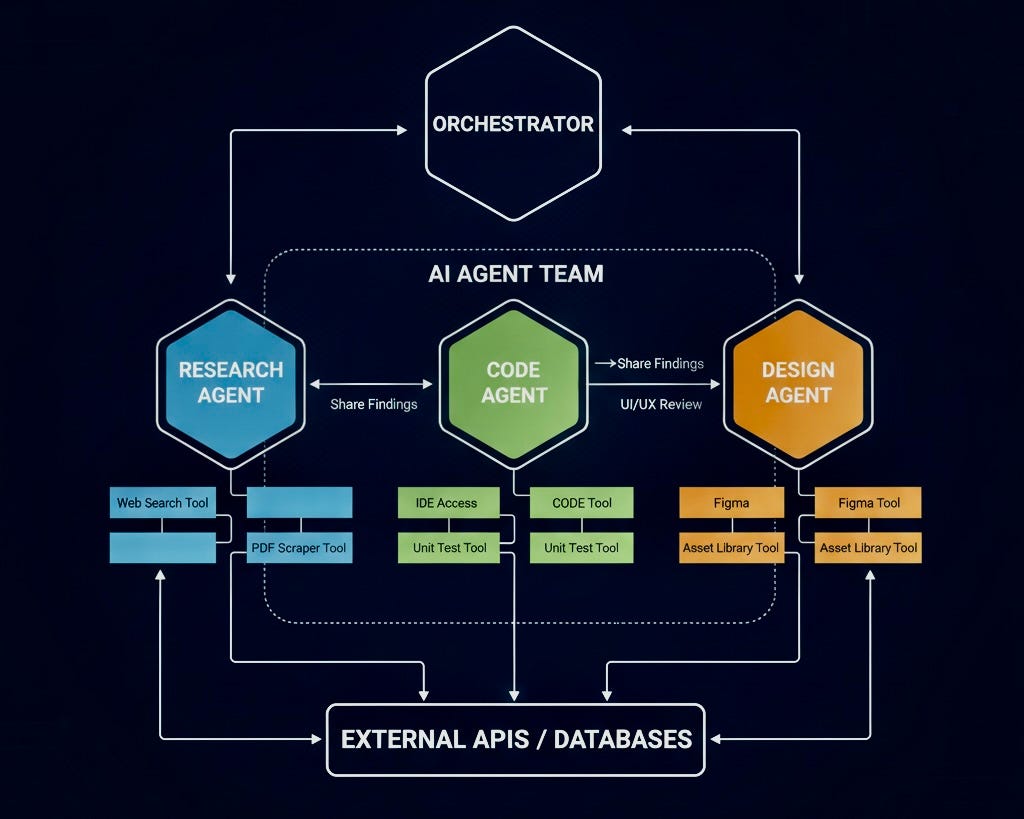

Workflow Orchestration

While agent loops can operate independently, they often function within larger systems, consuming inputs from other components and producing outputs for downstream use.

A workflow is a sequence of tasks executed in a specific order to achieve a goal, serving as an orchestration layer that coordinates constituent components. Workflows may be fully deterministic, fully dynamic (requiring intelligence at each step), or a hybrid of the two. Their complexity varies widely, depending on the possible state transitions (branches) at each step and on the logic that selects a branch based on the intermediate results of preceding steps.

By encoding rule-based logic for specific tasks, workflows can reduce the non-determinism and reasoning burden imposed on the LLM at runtime. In multi-agent architectures, workflows and orchestrator agents can operate at a higher level of abstraction than individual tasks, coordinating sub-agents for structured subproblems. An example of such orchestration is shown in the following diagram:
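To make the hybrid case concrete, here is a minimal sketch in which one step is dynamic (a classification that an LLM would normally perform) and the branching itself is deterministic. The function names, labels, and the ticket-routing scenario are all hypothetical, and `classify_ticket` is a keyword stub rather than a real model call.

```python
from typing import Callable


def classify_ticket(text: str) -> str:
    """Dynamic step: a stub standing in for an LLM classification call."""
    return "refund" if "refund" in text.lower() else "general"


def handle_refund(text: str) -> str:
    return "Routed to refund sub-agent"


def handle_general(text: str) -> str:
    return "Routed to general support sub-agent"


# Deterministic routing table: the branch taken depends only on the label,
# so the model never has to reason about which component runs next.
ROUTES: dict[str, Callable[[str], str]] = {
    "refund": handle_refund,
    "general": handle_general,
}


def run_workflow(ticket: str) -> list[str]:
    trace = ["received"]
    label = classify_ticket(ticket)      # intermediate result of the prior step
    trace.append(f"classified:{label}")
    trace.append(ROUTES[label](ticket))  # rule-based state transition
    return trace


print(run_workflow("I want a refund for my order"))
```

Keeping the routing in a table means new branches can be added without changing the prompt or the classification step.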

External Communication & Integration

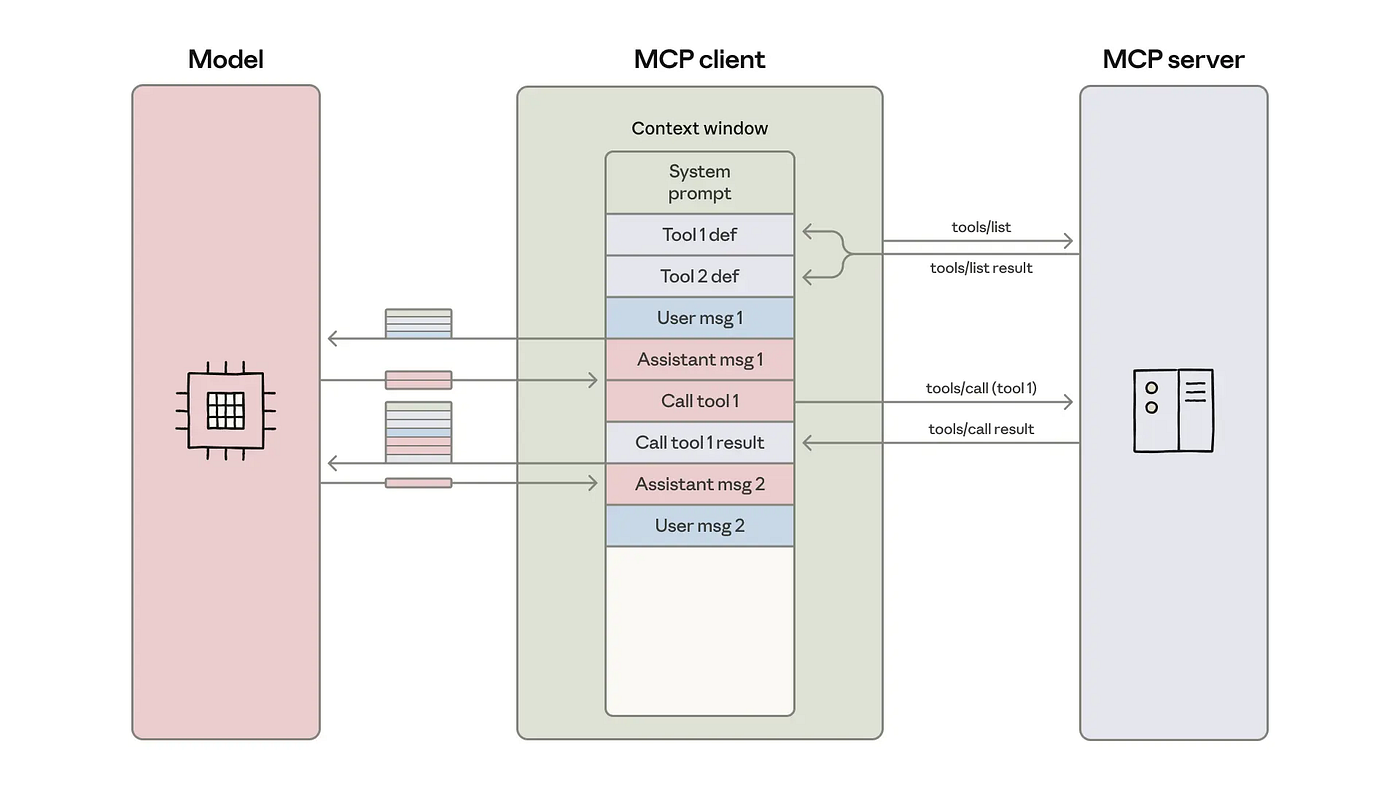

Integrating external services into AI systems, as discussed thus far, would typically require manually defining tools for each dependency used in a project. The Model Context Protocol (MCP) addresses this by standardizing how AI systems discover and interact with external tools, reducing development and maintenance effort for developers, and promoting portability. By providing a unified interface for communication, MCP increases interoperability, allowing models to seamlessly discover and interact with various sources.

MCP overview

MCP follows a client-server architecture in which AI applications act as clients and connect to MCP servers that provide the desired external capabilities. Each server exposes a set of primitives, which correspond to concepts discussed in the preceding sections:

- Tools: executable functions for the AI Application to invoke

- Resources: readable/queryable data sources that provide contextual content

- Prompts: templates that help structure interaction with language models

Connected clients can dynamically discover and access these primitives at runtime, reducing tool integration time and dependency coupling. In the case of tools, clients can also execute them upon discovery. While clients usually initiate discovery and tool execution, servers can send messages back to request host model sampling, collect user input, or log messages. This structure gives agentic AI systems a consistent and controlled way to integrate external services, data, and user interaction into their execution environment.
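The discover-then-execute flow for tools can be illustrated with a toy, in-process server. This is not the official MCP SDK and involves no transport: `ToyServer` and its `add` tool are hypothetical, and the message shapes are only loosely modeled on MCP's JSON-RPC `tools/list` and `tools/call` methods, so consult the specification for the exact format.

```python
def add(a: int, b: int) -> int:
    return a + b


class ToyServer:
    """In-process stand-in for an MCP server (no transport, not the real SDK)."""

    def __init__(self) -> None:
        self._tools = {
            "add": {
                "handler": add,
                "description": "Add two integers.",
                "inputSchema": {
                    "type": "object",
                    "properties": {"a": {"type": "integer"},
                                   "b": {"type": "integer"}},
                    "required": ["a", "b"],
                },
            }
        }

    def handle(self, request: dict) -> dict:
        method, params = request["method"], request.get("params", {})
        if method == "tools/list":    # discovery
            result = {"tools": [
                {"name": name, "description": tool["description"],
                 "inputSchema": tool["inputSchema"]}
                for name, tool in self._tools.items()]}
        elif method == "tools/call":  # execution
            value = self._tools[params["name"]]["handler"](**params["arguments"])
            result = {"content": [{"type": "text", "text": str(value)}]}
        else:
            return {"jsonrpc": "2.0", "id": request["id"],
                    "error": {"code": -32601, "message": "Method not found"}}
        return {"jsonrpc": "2.0", "id": request["id"], "result": result}


# Client side: discover the available tools first, then call one by name.
server = ToyServer()
listing = server.handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
tool_name = listing["result"]["tools"][0]["name"]
call = server.handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
                      "params": {"name": tool_name, "arguments": {"a": 2, "b": 3}}})
print(call["result"]["content"][0]["text"])
```

Because the client learns the tool name and schema at runtime, it needs no compile-time knowledge of what the server offers.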

Agentic Runtime Environments

Agentic projects are often event-driven, whether the trigger is a client starting an application or incoming data kicking off a larger workflow. Short-running, sporadically invoked tasks are a natural fit for serverless infrastructure such as AWS Lambda functions, and multi-step workflows can be further orchestrated with AWS Step Functions. This avoids idle compute costs and lets computation scale automatically with requests, without the complexity of provisioning and managing infrastructure. Furthermore, decoupling the runtimes of system components tends to make solutions more robust and scalable.
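A short-running, event-triggered task might look like the sketch below, which uses the standard `(event, context)` signature of a Python Lambda handler. The event field `document`, the `summarize` stub, and the response shape are assumptions made for this example; a real handler would call an LLM and follow the event format of its actual trigger.

```python
import json


def summarize(text: str) -> str:
    """Stub for the agent task; a real handler would call an LLM here."""
    return text[:40]


def lambda_handler(event: dict, context: object) -> dict:
    # 'document' is a hypothetical field of the triggering event.
    text = event.get("document", "")
    if not text:
        return {"statusCode": 400,
                "body": json.dumps({"error": "missing document"})}
    return {"statusCode": 200,
            "body": json.dumps({"summary": summarize(text)})}


# Local invocation for illustration; in AWS the runtime supplies both arguments.
response = lambda_handler({"document": "Quarterly report text"}, None)
print(response["statusCode"])
```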

In addition to executing pre-defined workflows, some agentic systems can generate and execute code autonomously. LLMs can produce new code at runtime, which can then be executed in sandboxed environments to extend autonomy beyond pre-existing tasks, enabling dynamic problem solving. However, resource limitations must be established with this approach to maintain safety and control, as discussed in the following section.
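One simple resource limit is a wall-clock timeout on a separate process. The sketch below runs a generated snippet in a child Python interpreter and stops it if it exceeds the limit; `run_generated_code` is a hypothetical helper. Note the assumption: a subprocess with a timeout is only one layer of control and is not a real sandbox, since the child still has the parent's file and network access. Production systems typically add containers or similar isolation.

```python
import subprocess
import sys


def run_generated_code(code: str, timeout_s: float = 2.0) -> dict:
    """Run untrusted code in a child interpreter with a wall-clock limit."""
    try:
        proc = subprocess.run(
            # -I runs Python in isolated mode (ignores user site and env vars).
            [sys.executable, "-I", "-c", code],
            capture_output=True, text=True, timeout=timeout_s,
        )
    except subprocess.TimeoutExpired:
        # subprocess.run kills the child before raising this exception.
        return {"ok": False, "stdout": "", "error": "timeout"}
    return {"ok": proc.returncode == 0,
            "stdout": proc.stdout.strip(),
            "error": proc.stderr.strip()}


result = run_generated_code("print(sum(range(10)))")
print(result["stdout"])
```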

Security & Observability

Having defined the components comprising agentic AI systems and mechanisms used to solve problems through environment interactions, it’s important to discuss how to control access to these systems to minimize potential damage from errors.

Principle of least privilege

A common security principle is to ensure systems have only the minimum access rights necessary to perform their legitimate functions. When developing agentic projects, this can be achieved by specifying resource-specific permissions and appropriately scoping authentication for service integrations.

System Safeguards

In addition to permissions, developers can implement bounds and validations within tools to appropriately scope and limit access. Furthermore, technologies such as Amazon Bedrock Guardrails can be used to enforce custom policies covering attributes such as privacy, truthfulness, or safety by evaluating outputs against a logical rule set.
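Bounds and validations inside a tool can be as simple as checking arguments before any side effect occurs. The sketch below is a hypothetical refund tool; the limit, the allowed currencies, and the function name are all invented for illustration.

```python
MAX_REFUND = 100.0            # hypothetical per-call ceiling
ALLOWED_CURRENCIES = {"USD"}  # hypothetical allow-list


def issue_refund(order_id: str, amount: float, currency: str = "USD") -> str:
    """Tool that validates model-supplied arguments before acting on them."""
    if currency not in ALLOWED_CURRENCIES:
        raise ValueError(f"Currency {currency!r} is not permitted")
    if not 0 < amount <= MAX_REFUND:
        raise ValueError(f"Amount must be in (0, {MAX_REFUND}]")
    # A real tool would call the payment system here.
    return f"Refunded {amount:.2f} {currency} for order {order_id}"


print(issue_refund("A1", 25))
```

Raising an error, rather than silently clamping the value, lets the calling loop return the message to the model so it can correct its request.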

Human-in-the-loop (HITL)

HITL is a real-time intervention process that adds intermediate checkpoints to an agentic workflow, requiring human validation and approval before the workflow proceeds to subsequent steps. HITL enables humans to identify and correct errors in high-risk operations such as writing and updating data or interacting with customers and other external parties.
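A checkpoint of this kind can be sketched as a gate in front of high-risk actions. In the example below, the set of risky actions and the `approve` callback are hypothetical; in practice the callback might prompt a reviewer in a UI or pause the workflow until a response arrives.

```python
from typing import Callable

# Hypothetical set of actions that require human sign-off.
HIGH_RISK_ACTIONS = {"update_record", "send_customer_email"}


def execute_with_checkpoint(action: str, payload: dict,
                            approve: Callable[[str, dict], bool]) -> str:
    """Run low-risk actions directly; ask a human before high-risk ones."""
    if action in HIGH_RISK_ACTIONS and not approve(action, payload):
        return f"{action}: rejected by reviewer"
    # A real system would dispatch to the corresponding tool here.
    return f"{action}: executed"


# The lambdas below simulate a reviewer's decision for illustration.
print(execute_with_checkpoint("read_record", {"id": 7}, lambda a, p: False))
print(execute_with_checkpoint("update_record", {"id": 7}, lambda a, p: False))
```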

Observability

Observability is another critical consideration for agentic systems. Implementations should log all agent requests, responses, and actions to enable retrospective analysis of decision paths. When feasible, agents should also record their reasoning and justifications for each decision to aid in explainability.
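One way to capture requests, responses, actions, and optional reasoning is to emit a structured record per step. The sketch below uses Python's standard `logging` and `json` modules; the record fields (`run_id`, `step`, `kind`, `reasoning`) are assumptions chosen for this example rather than an established schema.

```python
import json
import logging
import time
from typing import Optional

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger("agent.audit")


def log_step(run_id: str, step: int, kind: str, payload: dict,
             reasoning: Optional[str] = None) -> dict:
    """Emit one structured record per agent request, response, or action."""
    record = {
        "run_id": run_id,
        "step": step,
        "kind": kind,            # e.g. "request", "response", "tool_call"
        "payload": payload,
        "reasoning": reasoning,  # recorded only when the agent provides it
        "timestamp": time.time(),
    }
    logger.info(json.dumps(record))
    return record


entry = log_step("run-001", 1, "tool_call",
                 {"name": "get_weather", "arguments": {"city": "Paris"}},
                 reasoning="User asked about the weather in Paris.")
```

Sharing a `run_id` across all steps of a run makes it possible to reconstruct the full decision path afterwards.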

Conclusion

Agentic AI represents a shift from human-driven systems to systems that can autonomously perceive, reason, and execute multi-step plans in pursuit of complex objectives.

This article explored the foundational elements enabling this autonomy: contextual awareness, tool ecosystems to extend capabilities, orchestration patterns for sophisticated workflows, standardized protocols for integration, and practices to ensure safety and reliability.

The field is young and rapidly evolving. The hope with this article is that readers now have the foundational knowledge to explore concepts more deeply, contribute through hands-on experimentation, and build systems that push the boundaries of what’s possible.