Accurate by Design: Advanced Data Quality on AWS

Introduction

Data pipelines in AWS orchestrate the movement and transformation of data across various AWS services. The core objective of these pipelines is to enable efficient data processing, analysis, and storage, ensuring that data is available where and when it is needed. Maintaining high data quality throughout this process is critical; it ensures reliability, accuracy, and timeliness of the data, directly impacting decision-making and operational efficiency.

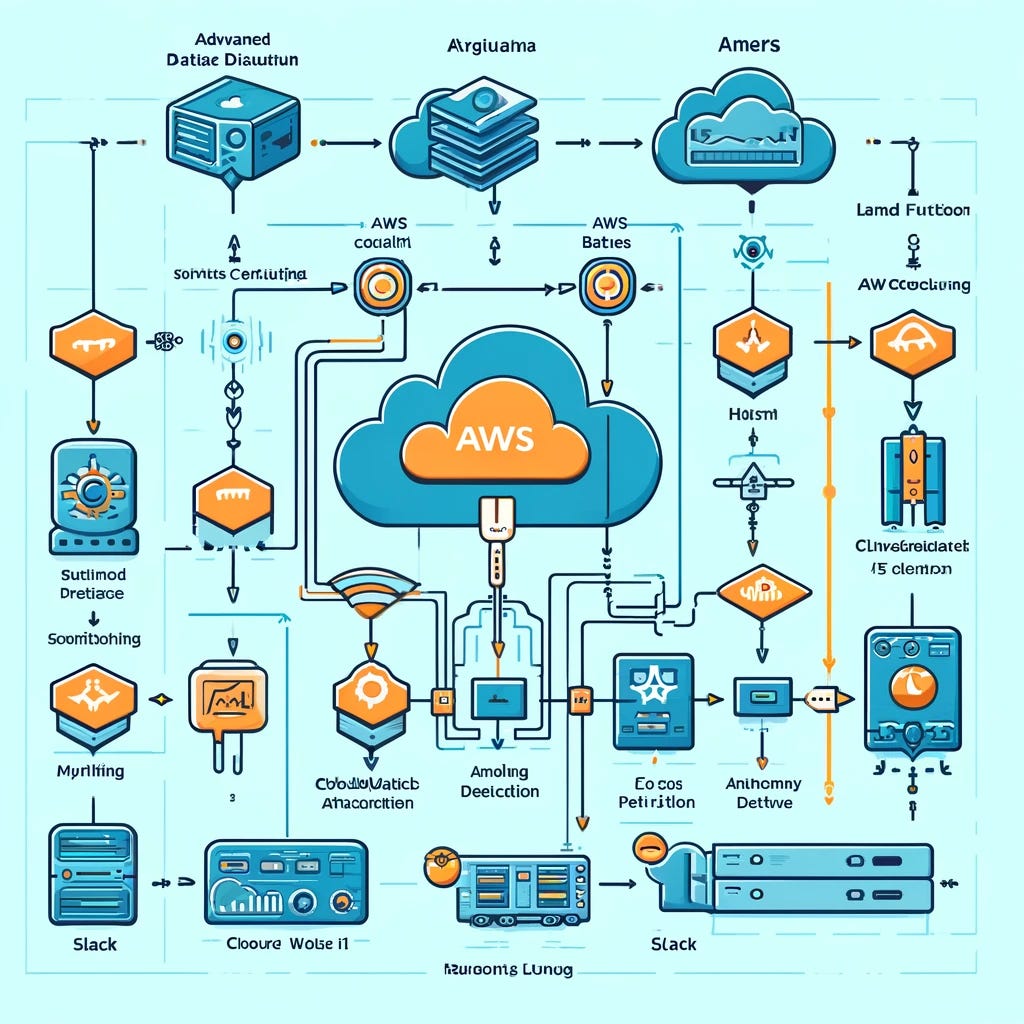

This piece focuses on an architecture built on AWS Step Functions, AWS Lambda, AWS Batch, Amazon CloudWatch, and Slack. AWS Step Functions coordinate workflows between services, so complex data processing tasks run in a managed, scalable way. AWS Lambda provides serverless compute for quick, cost-effective data processing. AWS Batch runs batch computing jobs and manages the allocation of computational resources for them. Amazon CloudWatch monitors the performance of AWS services and applications, providing insights through metrics and logs, and its anomaly detection feature identifies irregular patterns that can signal data quality issues. Slack integration provides real-time alerting, so teams can respond quickly to detected anomalies.

Understanding and implementing these technologies effectively can significantly enhance data quality management in cloud architectures.

Section 1: Understanding the Architecture

Overview of AWS Step Functions

AWS Step Functions coordinate multiple AWS services into serverless workflows. They are well suited to orchestrating complex processes that involve multiple steps, such as data processing pipelines. The primary role of Step Functions is to run these steps in the order the workflow defines, including branches and parallel paths, and to handle errors and retries as needed. For data pipeline management, Step Functions provide a visual interface for monitoring the progress of each workflow execution, improving control and visibility.
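To make the retry and error-handling role concrete, here is a minimal sketch that builds an Amazon States Language (ASL) definition in Python. The Retry and Catch fields are standard ASL; the state names, the Lambda ARN, and the retry settings are illustrative assumptions, not values from a real deployment.

```python
import json


def task_with_retries(resource_arn: str, next_state: str, fallback_state: str) -> dict:
    """Build an ASL Task state that retries failures, then routes to a fallback state."""
    return {
        "Type": "Task",
        "Resource": resource_arn,
        "Retry": [
            {
                # Retry any error up to 3 times, waiting 2 s, then 4 s, then 8 s.
                "ErrorEquals": ["States.ALL"],
                "IntervalSeconds": 2,
                "MaxAttempts": 3,
                "BackoffRate": 2.0,
            }
        ],
        "Catch": [
            {
                # Once retries are exhausted, keep the error details under $.error
                # and hand control to the fallback state.
                "ErrorEquals": ["States.ALL"],
                "ResultPath": "$.error",
                "Next": fallback_state,
            }
        ],
        "Next": next_state,
    }


definition = {
    "StartAt": "ValidateInput",
    "States": {
        "ValidateInput": task_with_retries(
            # Hypothetical Lambda function ARN.
            "arn:aws:lambda:us-east-1:123456789012:function:validate-input",
            next_state="Succeeded",
            fallback_state="HandleFailure",
        ),
        "Succeeded": {"Type": "Succeed"},
        "HandleFailure": {
            "Type": "Fail",
            "Error": "ValidationFailed",
            "Cause": "Input validation failed after all retries",
        },
    },
}

print(json.dumps(definition, indent=2))
```

The resulting JSON is what you would supply as the state machine definition, for example in the Step Functions console or through boto3's create_state_machine call.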

Lambda and Batch Jobs

Lambda provides serverless compute, running code in response to events without provisioning or managing servers. It is suited to lightweight, event-driven processes. AWS Batch, by contrast, is designed for batch computing: it provisions compute resources according to the volume and requirements of submitted jobs and can efficiently run hundreds of thousands of batch jobs.

Lambda is often used for data transformations and real-time processing tasks because of its event-driven nature and automatic scaling. Batch jobs are preferred for long-running or compute-intensive processing that is not time-sensitive, including work that would exceed Lambda's 15-minute maximum execution time, and that benefits from Batch's job queues and scheduling.
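As an illustration of the lightweight, event-driven work that suits Lambda, the sketch below validates a small set of records and splits them into valid and rejected groups. The lambda_handler(event, context) signature is the standard Python Lambda entry point; the event shape (a records list) and the required field names are hypothetical and would depend on your pipeline.

```python
from __future__ import annotations

# Hypothetical schema: every record must carry these fields.
REQUIRED_FIELDS = ("id", "timestamp", "amount")


def validate_record(record: dict) -> list[str]:
    """Return a list of problems found in one record (empty if it is valid)."""
    errors = []
    for field in REQUIRED_FIELDS:
        if record.get(field) in (None, ""):
            errors.append(f"missing {field}")
    amount = record.get("amount")
    # Only type-check a present amount; bool is a subclass of int, so reject it explicitly.
    if amount not in (None, "") and (isinstance(amount, bool) or not isinstance(amount, (int, float))):
        errors.append("amount is not numeric")
    return errors


def lambda_handler(event, context):
    """Split incoming records into valid and rejected sets."""
    valid, rejected = [], []
    for record in event.get("records", []):
        errors = validate_record(record)
        if errors:
            rejected.append({"record": record, "errors": errors})
        else:
            valid.append(record)
    return {
        "valid": valid,
        "rejected": rejected,
        "valid_count": len(valid),
        "rejected_count": len(rejected),
    }
```

Returning counts alongside the records makes it easy for a later step to publish them as data quality metrics.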

Integration Points

Step Functions integrate with Lambda and Batch through Task states: a state machine, defined in the Amazon States Language, can invoke a Lambda function or submit a Batch job as one of its steps. Step Functions then orchestrate these services according to the defined workflow, giving automated, reliable execution of data processing pipelines.

For example, a data processing workflow could start when new data arrives in an S3 bucket (for instance, through an Amazon EventBridge rule that starts the state machine). A first Lambda function performs initial data validation and transformation, then passes a reference to the data to a Batch job for more intensive processing, such as data analysis or machine learning model training. When the Batch job completes, another Lambda function handles post-processing tasks, such as updating databases or sending notifications. In this workflow, Step Functions serve as the backbone that coordinates Lambda and Batch jobs in a single, managed process.
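The workflow just described could be expressed roughly as the following ASL definition, generated from Python. The arn:aws:states:::lambda:invoke and arn:aws:states:::batch:submitJob.sync resources are Step Functions service integrations (the .sync suffix makes the state wait for the Batch job to finish); the function names, job name, job queue, and job definition are hypothetical placeholders.

```python
import json


def build_pipeline_definition(
    validate_fn: str, postprocess_fn: str, job_queue: str, job_definition: str
) -> dict:
    """ASL definition: Lambda validation -> Batch processing -> Lambda post-processing."""
    return {
        "Comment": "Validate with Lambda, process with Batch, post-process with Lambda",
        "StartAt": "ValidateAndTransform",
        "States": {
            "ValidateAndTransform": {
                "Type": "Task",
                "Resource": "arn:aws:states:::lambda:invoke",
                "Parameters": {"FunctionName": validate_fn, "Payload.$": "$"},
                # Keep only the function's return value, not the invocation metadata.
                "OutputPath": "$.Payload",
                "Next": "RunBatchJob",
            },
            "RunBatchJob": {
                "Type": "Task",
                # .sync: wait until the Batch job reaches a terminal state.
                "Resource": "arn:aws:states:::batch:submitJob.sync",
                "Parameters": {
                    "JobName": "data-processing",
                    "JobQueue": job_queue,
                    "JobDefinition": job_definition,
                },
                # Keep the validation output and attach the Batch result under $.batch.
                "ResultPath": "$.batch",
                "Next": "PostProcess",
            },
            "PostProcess": {
                "Type": "Task",
                "Resource": "arn:aws:states:::lambda:invoke",
                "Parameters": {"FunctionName": postprocess_fn, "Payload.$": "$"},
                "OutputPath": "$.Payload",
                "End": True,
            },
        },
    }


definition = build_pipeline_definition(
    validate_fn="validate-and-transform",   # hypothetical
    postprocess_fn="post-process",          # hypothetical
    job_queue="data-processing-queue",      # hypothetical
    job_definition="data-processing-job",   # hypothetical
)
print(json.dumps(definition, indent=2))
```

You could then pass json.dumps(definition) as the definition argument of boto3's create_state_machine, together with a name and an IAM role ARN that allows invoking the functions and submitting Batch jobs.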

Section 2: Monitoring and Metrics with Amazon CloudWatch

Introduction to CloudWatch

Amazon CloudWatch provides monitoring and observability for AWS resources and applications. It collects and tracks metrics, collects and monitors log files, and lets you set alarms that react automatically to changes in your AWS resources. It is central to monitoring operational health and identifying performance problems across AWS services.

Metrics for Data Quality

Key metrics to monitor for data quality include error rates, processing times, and throughput measured in bytes, events, or rows. These metrics show how well and how reliably data processing jobs perform, and sudden changes, such as a drop in rows processed or a rise in rejected records, can signal data quality issues.
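One way to derive these metrics is to summarize each processing run. The sketch below assumes your job can report rows read, rows rejected, bytes processed, and start and end times; the RunStats structure and the metric names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class RunStats:
    """Hypothetical summary that a processing job reports at the end of a run."""
    rows_in: int
    rows_rejected: int
    bytes_processed: int
    started_at: float   # epoch seconds
    finished_at: float  # epoch seconds


def quality_metrics(stats: RunStats) -> dict:
    """Derive error rate, processing time, and throughput from one run."""
    duration = max(stats.finished_at - stats.started_at, 0.0)
    # An empty run yields a 0% error rate here; a zero row count may itself deserve an alert.
    error_rate = 100.0 * stats.rows_rejected / stats.rows_in if stats.rows_in else 0.0
    rows_per_second = stats.rows_in / duration if duration > 0 else 0.0
    return {
        "ErrorRatePercent": error_rate,
        "ProcessingTimeSeconds": duration,
        "RowsPerSecond": rows_per_second,
        "BytesProcessed": stats.bytes_processed,
    }


stats = RunStats(rows_in=1000, rows_rejected=25, bytes_processed=2_048_000,
                 started_at=100.0, finished_at=110.0)
print(quality_metrics(stats))
# {'ErrorRatePercent': 2.5, 'ProcessingTimeSeconds': 10.0, 'RowsPerSecond': 100.0, 'BytesProcessed': 2048000}
```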

Custom metrics are metrics you define and publish yourself, covering aspects of an application or workflow that AWS services do not report by default. To use them, publish your data to CloudWatch with the PutMetricData API. This flexibility lets you monitor aspects of data quality that are unique to your workload, such as the share of records with missing key fields.
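A minimal sketch of publishing such metrics, assuming a boto3 CloudWatch client (boto3.client("cloudwatch")) is passed in. put_metric_data with Namespace and MetricData is the boto3 form of the PutMetricData API; the namespace, the Pipeline dimension, and the metric names are hypothetical.

```python
from __future__ import annotations

from datetime import datetime, timezone

# CloudWatch unit for each (hypothetical) metric name.
UNITS = {
    "ErrorRatePercent": "Percent",
    "ProcessingTimeSeconds": "Seconds",
    "RowsPerSecond": "Count/Second",
    "BytesProcessed": "Bytes",
}


def build_metric_data(metrics: dict[str, float], pipeline: str,
                      timestamp: datetime | None = None) -> list[dict]:
    """Turn {name: value} into the MetricData list that PutMetricData expects."""
    ts = timestamp or datetime.now(timezone.utc)
    return [
        {
            "MetricName": name,
            "Dimensions": [{"Name": "Pipeline", "Value": pipeline}],
            "Timestamp": ts,
            "Value": float(value),
            # Unknown metric names fall back to CloudWatch's "None" unit.
            "Unit": UNITS.get(name, "None"),
        }
        for name, value in metrics.items()
    ]


def publish_metrics(cloudwatch, namespace: str, metrics: dict[str, float], pipeline: str) -> None:
    """Send one run's metrics in a single PutMetricData call."""
    cloudwatch.put_metric_data(Namespace=namespace, MetricData=build_metric_data(metrics, pipeline))


# Usage (requires boto3 and AWS credentials):
# import boto3
# publish_metrics(boto3.client("cloudwatch"), "DataPipeline/Quality",
#                 {"ErrorRatePercent": 2.5, "RowsPerSecond": 100.0}, pipeline="orders-daily")
```

Injecting the client keeps the formatting logic testable without AWS access and lets the same code run inside Lambda or a Batch container.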

Anomaly Detection in CloudWatch

When you enable anomaly detection on a metric, CloudWatch applies statistical and machine learning algorithms to continuously analyze it, determine a normal baseline that accounts for hourly, daily, and weekly patterns, and surface anomalies with minimal user intervention.

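Configuring anomaly detection for a data quality metric involves selecting the metric (its namespace, name, dimensions, and statistic) and, optionally, excluding time periods that should not be used to train the model, such as known outages or one-off backfills. Alarms built on the model then use a band of expected values, whose width you set in standard deviations, as their threshold. This lets CloudWatch alert you when a metric deviates from its expected pattern, so you can take corrective action quickly.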
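The configuration described above maps onto the PutAnomalyDetector API. The sketch below only builds the request; with boto3 you would pass it as cloudwatch.put_anomaly_detector(**request). The namespace, metric, dimension, and excluded dates are hypothetical, and excluded ranges are given as timezone-aware datetimes.

```python
from __future__ import annotations

from datetime import datetime, timezone


def anomaly_detector_request(
    namespace: str,
    metric_name: str,
    dimensions: dict[str, str],
    stat: str = "Average",
    excluded: list[tuple[datetime, datetime]] | None = None,
) -> dict:
    """Build keyword arguments for CloudWatch PutAnomalyDetector."""
    request = {
        "SingleMetricAnomalyDetector": {
            "Namespace": namespace,
            "MetricName": metric_name,
            "Dimensions": [{"Name": k, "Value": v} for k, v in dimensions.items()],
            "Stat": stat,
        }
    }
    if excluded:
        # Periods the model should ignore when learning the baseline (e.g. a known outage).
        request["Configuration"] = {
            "ExcludedTimeRanges": [{"StartTime": start, "EndTime": end} for start, end in excluded],
            "MetricTimezone": "UTC",
        }
    return request


request = anomaly_detector_request(
    "DataPipeline/Quality",
    "ErrorRatePercent",
    {"Pipeline": "orders-daily"},
    excluded=[(datetime(2024, 3, 1, tzinfo=timezone.utc), datetime(2024, 3, 2, tzinfo=timezone.utc))],
)
# With boto3: boto3.client("cloudwatch").put_anomaly_detector(**request)
```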

Section 3: Alerting with Slack

Integration of CloudWatch with Slack

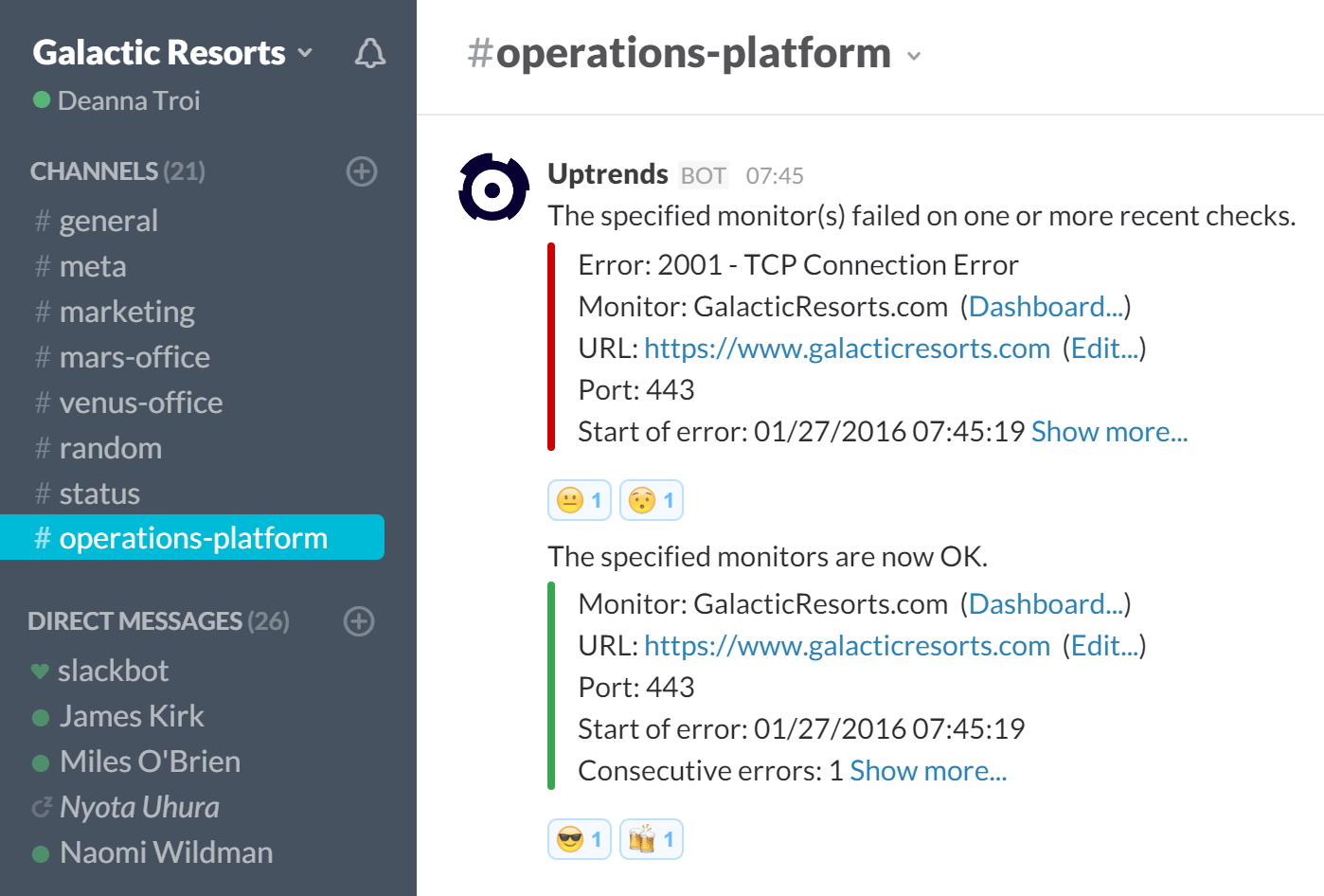

Slack notifications for CloudWatch alarms are typically delivered through Amazon SNS and AWS Lambda: the alarm publishes to an SNS topic, and a Lambda function subscribed to that topic posts the alert to a designated Slack channel.

Benefits of Using Slack for Alerts

- Immediate Notifications: Slack delivers real-time alerts, reducing response times to incidents.

- Centralized Monitoring: Aggregates alerts from various sources into a single, accessible platform.

- Customizable Alert Routing: Allows for alerts to be directed to specific channels or users, based on severity or type.

- Interactive Features: Slack supports interactive actions, enabling teams to respond directly to alerts from within the platform.

Setting Up Slack Notifications for CloudWatch Alerts

- Lambda Function Setup: Create a Lambda function that translates CloudWatch alarm messages into Slack message format.

- SNS Topic Configuration: Create an SNS topic for CloudWatch alarm notifications and subscribe the Lambda function to it.

- Slack Webhook: Create a Slack Incoming Webhook for the target channel; the Lambda function posts its formatted messages to the webhook URL.

- Alarm Configuration: Configure CloudWatch alarms, including anomaly detection alarms, to publish to the SNS topic when they change state.
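The Lambda step in the setup list might look like the following minimal sketch. It assumes the function is subscribed to the SNS topic, so each record carries the CloudWatch alarm JSON in Sns.Message, and that the Incoming Webhook URL is supplied in a SLACK_WEBHOOK_URL environment variable (a hypothetical name; you may prefer a secrets store for the URL). Only the standard library is used, so no extra packaging is needed.

```python
import json
import os
import urllib.request


def format_slack_message(alarm: dict) -> dict:
    """Translate a CloudWatch alarm notification into a Slack webhook payload."""
    state = alarm.get("NewStateValue", "UNKNOWN")
    icon = {"ALARM": ":red_circle:", "OK": ":large_green_circle:"}.get(state, ":grey_question:")
    text = (
        f"{icon} *{alarm.get('AlarmName', 'unnamed alarm')}* is {state}\n"
        f"{alarm.get('NewStateReason', '')}\n"
        f"Region: {alarm.get('Region', 'n/a')} | Time: {alarm.get('StateChangeTime', 'n/a')}"
    )
    return {"text": text}


def post_to_slack(webhook_url: str, payload: dict) -> int:
    """POST a JSON payload to a Slack Incoming Webhook and return the HTTP status."""
    request = urllib.request.Request(
        webhook_url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.status


def lambda_handler(event, context):
    """Entry point for SNS-triggered invocations."""
    webhook_url = os.environ["SLACK_WEBHOOK_URL"]
    posted = 0
    for record in event.get("Records", []):
        # SNS delivers the alarm as a JSON string in the message body.
        alarm = json.loads(record["Sns"]["Message"])
        post_to_slack(webhook_url, format_slack_message(alarm))
        posted += 1
    return {"posted": posted}
```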

Anomaly Detection Alerts

Examples of anomaly detection alerts include sudden spikes in application error rates, unusual drops in user traffic, and unexpected resource utilization patterns. CloudWatch evaluates these alarms against a band of expected values that its model learns from each metric's history, rather than against a fixed threshold.
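For alerts like these, an alarm compares the metric with an ANOMALY_DETECTION_BAND metric math expression instead of a static threshold. The sketch below builds a PutMetricAlarm request (usable as cloudwatch.put_metric_alarm(**request) in boto3) and chooses the comparison operator by direction: spikes use the upper bound, drops the lower bound. The alarm name, metric, band width of 2 standard deviations, evaluation settings, and SNS topic ARN are assumptions.

```python
def anomaly_alarm_request(
    alarm_name: str,
    namespace: str,
    metric_name: str,
    dimensions: dict,
    sns_topic_arn: str,
    direction: str = "both",
    band_width: float = 2.0,
    period: int = 300,
    evaluation_periods: int = 3,
) -> dict:
    """Build keyword arguments for CloudWatch PutMetricAlarm using an anomaly detection band."""
    operators = {
        "spike": "GreaterThanUpperThreshold",  # e.g. error rate jumps
        "drop": "LessThanLowerThreshold",      # e.g. row count or traffic falls
        "both": "LessThanLowerOrGreaterThanUpperThreshold",
    }
    return {
        "AlarmName": alarm_name,
        "ComparisonOperator": operators[direction],
        "EvaluationPeriods": evaluation_periods,
        # The threshold is the band produced by the metric query with Id "band".
        "ThresholdMetricId": "band",
        "Metrics": [
            {
                "Id": "m1",
                "ReturnData": True,
                "MetricStat": {
                    "Metric": {
                        "Namespace": namespace,
                        "MetricName": metric_name,
                        "Dimensions": [{"Name": k, "Value": v} for k, v in dimensions.items()],
                    },
                    "Period": period,
                    "Stat": "Average",
                },
            },
            {
                "Id": "band",
                # Band width is controlled by band_width (in standard deviations).
                "Expression": f"ANOMALY_DETECTION_BAND(m1, {band_width})",
                "Label": f"{metric_name} (expected)",
                "ReturnData": True,
            },
        ],
        # Notify the SNS topic that feeds Slack when the alarm fires and when it recovers.
        "AlarmActions": [sns_topic_arn],
        "OKActions": [sns_topic_arn],
    }


request = anomaly_alarm_request(
    "orders-row-drop",                                         # hypothetical
    "DataPipeline/Quality",                                    # hypothetical
    "RowsPerSecond",                                           # hypothetical
    {"Pipeline": "orders-daily"},                              # hypothetical
    "arn:aws:sns:us-east-1:123456789012:data-quality-alerts",  # hypothetical
    direction="drop",
)
```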

Best Practices for Alert Management in Slack

- Channel Organization: Create dedicated channels for different types of alerts (e.g., critical errors, performance metrics).

- Alert Severity Levels: Customize alert messages to include severity levels, helping team members prioritize responses.

- Use of Threads: Encourage the use of threads for discussing specific alerts, keeping the main channel uncluttered.

- Automation of Routine Responses: Implement Slack bots or apps to automate responses to frequent, predictable alerts.

- Review and Adjust: Regularly review alert thresholds and configurations to reduce noise and ensure relevance.
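The severity and channel-routing practices above could be sketched as a small classifier. It assumes a hypothetical convention in which each alarm's description contains a tag such as severity=critical, and that each Slack channel has its own Incoming Webhook whose URL is stored in a per-severity environment variable (also hypothetical names). Alarms without a tag default to warning.

```python
from __future__ import annotations

import re

# Hypothetical: one Incoming Webhook per channel, each URL stored in its own environment variable.
WEBHOOK_ENV_BY_SEVERITY = {
    "critical": "SLACK_WEBHOOK_CRITICAL",
    "warning": "SLACK_WEBHOOK_WARNING",
    "info": "SLACK_WEBHOOK_INFO",
}

# Hypothetical convention: the alarm description contains "severity=<level>".
SEVERITY_PATTERN = re.compile(r"severity=(critical|warning|info)", re.IGNORECASE)


def classify(alarm: dict) -> str:
    """Read the severity tag from the alarm description, defaulting to 'warning'."""
    match = SEVERITY_PATTERN.search(alarm.get("AlarmDescription") or "")
    return match.group(1).lower() if match else "warning"


def route(alarm: dict) -> tuple[str, str]:
    """Return (webhook environment variable name, message text) for an alarm."""
    severity = classify(alarm)
    text = f"[{severity.upper()}] {alarm.get('AlarmName', 'unnamed alarm')}: {alarm.get('NewStateReason', '')}"
    return WEBHOOK_ENV_BY_SEVERITY[severity], text
```

A Slack-posting Lambda function could then look up the webhook URL in the returned environment variable and post the severity-prefixed text there.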

This approach leverages Slack’s real-time communication capabilities to enhance the responsiveness and efficiency of incident management workflows, streamlining the process of monitoring and responding to data anomalies detected by CloudWatch.

Conclusion

Data quality is critical to operational efficiency and decision-making accuracy within any AWS-based environment. The integration of AWS services such as Step Functions, Lambda, Batch, CloudWatch, and external alerting mechanisms like Slack plays a foundational role in both establishing and maintaining this quality. These services enable precise orchestration, execution, and monitoring of data workflows, ensuring that data integrity and reliability are upheld through comprehensive metrics and anomaly detection.

Adopting a proactive stance on data quality monitoring and alerting is not optional but necessary. Early detection of anomalies and swift response to potential issues can significantly reduce downtime and mitigate the risk of data corruption. It is advisable to leverage the capabilities of CloudWatch in conjunction with Slack alerts to maintain constant vigilance over data quality metrics.

Experiment with these AWS services to refine and adapt your data quality architecture. Tailoring them to the specific needs of your data pipeline can uncover optimizations that improve performance and reliability. In essence, the robustness of your data quality strategy directly influences the effectiveness of your data-driven decisions, so continuously improve and adapt your architecture as new data patterns and anomalies emerge.