Demystifying The Artificial in Artificial Intelligence

The field of Natural Language Processing (NLP) has exploded in popularity in recent years, largely because it has become so accessible to the public. Developers now have such simple access to large language models (LLMs) through tools like AWS Bedrock that creating a generative AI application has never been easier. With these models so easy to reach for both developers and the non-technical general public, it is more important than ever to understand what they are fundamentally doing.

NLP Through Probability

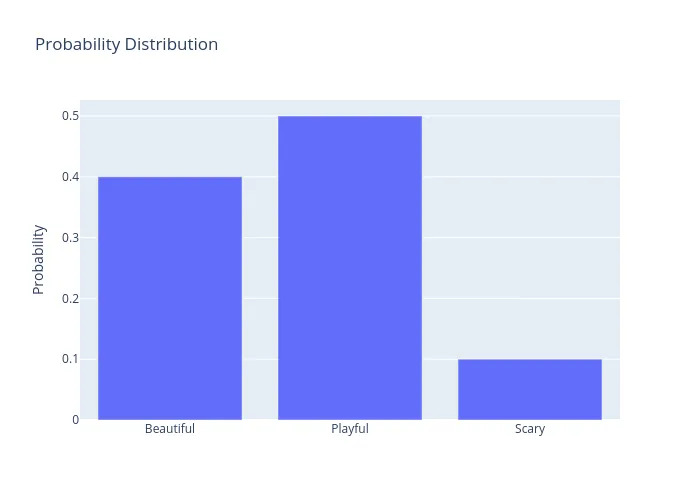

Consider the phrase “The dog is _____”. Since you have gotten this far in the article, it is probably safe to say you have some understanding of the English language, and so you have some ideas about how to finish that sentence. However, if you had no understanding of the language but plenty of examples to learn from, you would go off of what you have seen before. If the only sentences available to learn from are “The dog is playful” and “The dog is beautiful”, then you could finish the original phrase with either “playful” or “beautiful”. Since each option shows up an equal number of times in the library of examples, it is a 50/50 choice. If instead of two sentences we had 100, with the following histogram:

we could sample from the distribution shown and get the next word.
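The counting-and-sampling idea can be sketched in a few lines of Python. This is a minimal illustration of the toy example only, not how an LLM works internally. The function names `next_word_distribution` and `sample_next_word` are made up for this sketch, and the corpus is the two-sentence example from the text.

```python
import random
from collections import Counter

def next_word_distribution(sentences, prefix):
    """Estimate P(next word | prefix) by counting examples that start with prefix."""
    prefix_words = prefix.split()
    n = len(prefix_words)
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        # Only count sentences that match the prefix and have a word after it.
        if len(words) > n and words[:n] == prefix_words:
            counts[words[n]] += 1
    total = sum(counts.values())
    if total == 0:
        return {}
    return {word: count / total for word, count in counts.items()}

def sample_next_word(distribution, rng=random):
    """Draw one word at random, weighted by its estimated probability."""
    words = list(distribution)
    weights = [distribution[w] for w in words]
    return rng.choices(words, weights=weights, k=1)[0]

# The two example sentences from the text.
corpus = ["The dog is playful", "The dog is beautiful"]
dist = next_word_distribution(corpus, "The dog is")
print(dist)                    # {'playful': 0.5, 'beautiful': 0.5}
print(sample_next_word(dist))  # either 'playful' or 'beautiful'
```

With a larger library of examples, the same function would return an uneven distribution, and more frequent words would be sampled more often.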

This process can be repeated to generate sentences. The following animation outlines how the distribution for the next word changes as we start to build the sentence.
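As a rough sketch of that repetition, the loop below samples one word, appends it to the sentence so far, and then recomputes the distribution for the longer prefix. The corpus, the function names, and the `max_words` cutoff are all assumptions made for illustration.

```python
import random
from collections import Counter

def next_word_counts(sentences, prefix_words):
    """Count which words follow the given prefix in the example sentences."""
    n = len(prefix_words)
    counts = Counter()
    for sentence in sentences:
        words = sentence.split()
        if len(words) > n and words[:n] == prefix_words:
            counts[words[n]] += 1
    return counts

def generate(sentences, start, rng, max_words=20):
    """Build a sentence by repeatedly sampling the next word given all words so far."""
    words = start.split()
    while len(words) < max_words:
        counts = next_word_counts(sentences, words)
        if not counts:
            break  # no example continues this prefix, so the sentence ends
        candidates = list(counts)
        weights = [counts[w] for w in candidates]
        words.append(rng.choices(candidates, weights=weights, k=1)[0])
    return " ".join(words)

# Hypothetical example library.
corpus = [
    "The dog is playful",
    "The dog is beautiful",
    "The dog runs fast",
    "The cat is asleep",
]

rng = random.Random(0)  # seeded so repeated runs give the same samples
for _ in range(3):
    print(generate(corpus, "The", rng))
```

Because this sketch conditions on the exact prefix, it can only reproduce sentences it has already seen, which hints at why a lookup over stored examples is not enough on its own.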

This process builds a conditional probability distribution over the next word, conditioned on the string of words that came before. LLMs do not analyze a library of existing sentences and build a dictionary of possible next words; that is not only computationally impractical but impossible given the number of possible word sequences. Without the option of computing the probability distribution exactly, we can only approximate it. This is where neural networks do their part. Neural networks are what's known as universal function approximators, meaning that, given sufficient complexity, they can approximate a very broad class of functions to arbitrary accuracy. An LLM is fundamentally a neural network that has learned a very close approximation of the probability distribution over language. This close approximation results in language output that is shockingly human, one might even say magical… but there is no magic, just math.
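Two of the points above can be made concrete with a small sketch. The first part counts how many distinct contexts an exact lookup table would need; the vocabulary size and context length are illustrative assumptions, not figures from any particular model. The second part shows the softmax function, the standard way a network's raw scores are turned into a probability distribution; the scores here are made up, whereas a real LLM would compute them with a trained network.

```python
import math

def count_possible_contexts(vocab_size, context_length):
    """Number of distinct word sequences of a given length over a vocabulary."""
    return vocab_size ** context_length

def softmax(scores):
    """Turn arbitrary real-valued scores into probabilities that sum to 1."""
    highest = max(scores)  # subtracted for numerical stability
    exps = [math.exp(s - highest) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

# Assumed values, chosen only to show the scale of the problem.
vocab_size = 50_000
context_length = 10
print(f"{count_possible_contexts(vocab_size, context_length):.2e}")  # 9.77e+46

# Made-up scores for three candidate next words after "The dog is".
candidates = ["playful", "beautiful", "asleep"]
scores = [2.0, 2.0, 0.5]
probs = softmax(scores)
for word, p in zip(candidates, probs):
    print(f"{word}: {p:.3f}")
```

Even at ten words of context, the table would need far more entries than could ever be stored or observed, which is why the distribution has to be approximated by a function instead of looked up.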

How can we use this probability?

It turns out these word probabilities are extremely valuable and can be put to work on many useful tasks. Here are a few examples of how they can help:

1. Leveraging LLMs for Automated Content Retrieval: A Case Study

https://www.ncbi.nlm.nih.gov/pmc/articles/PMC10192861

Our first use case involves automated content retrieval from government websites. The linked document, itself retrieved from a government website, shows how LLMs can be used to access information from online resources efficiently. Automated processes like this one can quickly extract relevant content from web pages, making data gathering faster and less manual. This application could change how we interact with vast information repositories, making data collection more accessible and streamlined for individuals and organizations alike. It is just one of the many ways LLMs are shaping our future, and we at New Math Data are excited to explore further use cases and applications of this technology.

2. Fast Chart: Revolutionizing Medical Transcription with NLP

https://fastchart.com/news/natural-language-processing-matters-medical-transcription/

Fast Chart, a leading clinical documentation service provider, has developed state-of-the-art technology that utilizes natural language processing (NLP) to achieve impressive accuracy rates. With their Speech Understanding™ and Speech-Enabled EHR/EMR solutions, Fast Chart showcases the power of NLP in understanding and interpreting physician narratives. By leveraging NLP, Fast Chart’s technology can contextually understand a physician’s dictation, regardless of accent, dialect, or cadence. This enables physicians to dictate in a natural, conversational tone, improving efficiency and accuracy in medical transcription.

The use of LLMs in this context demonstrates a profound understanding of human language, adapting to variations in speech and providing reliable results. With an accuracy rate of over 98.5% and system uptime of 99.9%, Fast Chart has established itself as a trusted partner in the healthcare industry, improving the quality of clinical documentation while reducing transcription costs. This application of LLMs in medical transcription showcases their potential to revolutionize healthcare processes and improve patient care.

3. MolGPT: Molecular Generation with Transformer-Decoder Model

https://pubmed.ncbi.nlm.nih.gov/34694798

The application of deep learning for de novo molecule generation, or inverse molecular design, is an exciting development in drug design. By representing molecules as SMILES strings, researchers can leverage state-of-the-art natural language processing models, such as Transformers, for molecular design. In this use case, the authors propose MolGPT, a generative pre-training model inspired by GPT, to generate drug-like molecules. MolGPT is trained on the next token prediction task using masked self-attention, and it performs on par with other modern machine learning frameworks for molecular generation. The model can be conditioned to control multiple properties of the generated molecules, such as desired scaffolds and property values. This allows for the targeted generation of molecules with specific characteristics, making it a powerful tool for drug discovery. The interpretability of the generative process is also highlighted through saliency maps, providing insights into the model’s decision-making. This use case demonstrates how LLMs can effectively play with the probabilities of molecular structures to generate novel drug candidates, accelerating the drug design process.
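To make the link between molecules and next-token prediction concrete, here is a minimal sketch of how a SMILES string can be split into tokens and turned into (context, next token) training pairs. The regular expression is a simplified tokenizer assumed for illustration and is not MolGPT's actual tokenizer; the function names are likewise hypothetical.

```python
import re

# Simplified SMILES tokenizer (an assumption for this sketch): bracket atoms
# such as [Na+], the two-letter halogens Br and Cl, two-digit ring closures
# such as %12, and otherwise any single character.
SMILES_TOKEN = re.compile(r"\[[^\]]+\]|Br|Cl|%\d{2}|.")

def tokenize_smiles(smiles):
    """Split a SMILES string into a list of tokens."""
    return SMILES_TOKEN.findall(smiles)

def next_token_pairs(tokens):
    """Pair every prefix of the token list with the token that follows it."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]

tokens = tokenize_smiles("CCO")  # ethanol
print(tokens)  # ['C', 'C', 'O']
for context, target in next_token_pairs(tokens):
    print(context, "->", target)
```

Each pair is the same kind of question as “The dog is _____”: given the tokens so far, which token is likely to come next?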

4. Watson for Oncology

https://www.ibm.com/docs/en/announcements/watson-oncology

IBM has developed a large language model (LLM) that can be applied to various natural language processing tasks, including question-answering, text generation, and summarization. The use case provided demonstrates how the IBM LLM can be utilized in a customer service chat application. In this scenario, the LLM is trained to respond to customer inquiries and provide accurate and helpful responses. The model’s strength lies in its ability to understand context and generate human-like responses, ensuring a seamless and intuitive interaction for customers. By playing probability with words, the LLM can generate a range of potential responses, select the most appropriate one, and adapt its reply based on the customer’s input. This enables a dynamic and context-aware conversation, enhancing the overall user experience and improving customer satisfaction.

5. The Power of LLMs in Protein Function Prediction

https://arxiv.org/abs/2307.14367

The field of protein function prediction has seen significant advancements in recent years, thanks to the development of innovative machine-learning approaches. In this context, Large Language Models (LLMs) are proving to be invaluable tools. The use case we’re highlighting today showcases how LLMs are being leveraged to revolutionize our understanding of proteins. The Prot2Text approach, as outlined in the provided paper, proposes a novel method for predicting protein function. By combining Graph Neural Networks (GNNs) and LLMs in an encoder-decoder framework, Prot2Text offers a multi-modal perspective. This means that it integrates diverse data types such as protein sequence, structure, and textual annotations to generate detailed functional descriptions. This holistic approach is a significant departure from traditional binary or categorical classifications and provides a more nuanced understanding of protein functions. The power of this method lies in its ability to generate free-text predictions, moving beyond predefined labels and unlocking a transformative impact on protein research and potentially, drug discovery.

Conclusion: Unlocking the Power of LLMs

In this blog post, we have demystified the artificial in Artificial Intelligence, specifically in the domain of Natural Language Processing. By understanding the mathematical foundations of Large Language Models and their probability manipulations, we can appreciate their diverse applications. From revolutionizing medical transcription with accuracy and efficiency to innovating drug design and protein function prediction, LLMs are shaping our future. As showcased in the use cases explored, LLMs are not just about generating human-like text; they are powerful tools that can handle complex tasks, provide nuanced understandings, and streamline processes across various industries.

At New Math Data, we are excited about the potential of LLMs to transform how we interact with data and information. The use case on automated content retrieval highlights how LLMs can make data collection more accessible and efficient, especially from vast repositories like government websites. Additionally, the application of LLMs in customer service chat applications, as demonstrated by IBM’s LLM, enhances user experiences and improves satisfaction through dynamic and context-aware conversations.

As we continue to explore the capabilities of LLMs, it is essential to remember that their power lies in their ability to approximate complex functions and play with probabilities. This results in their seemingly magical ability to generate human-like language output. However, as we have emphasized, there is no magic, just math.

Stay tuned as we at New Math Data continue to bring you insights and innovative use cases of LLMs, unlocking their potential and shaping their responsible and ethical integration into our world.

Author: Ben Martin, Published May 16, 2024