Introduction

Batch workloads on AWS can run into scaling problems that are easy to overlook at first. In our case, multiple jobs in our AWS environment generated tens of thousands of files and stored them in an Amazon S3 bucket, and a downstream job then had to download a large subset of those files for further processing. This approach worked initially but quickly proved inefficient.

Downloading tens of thousands of files from Amazon S3 to the processing job was slow and resource-intensive. Transfers took anywhere from 10 minutes to 2 hours, which increased operational costs and latency.

To address these challenges and optimize our batch processing workflows, we explored various solutions and determined that integrating Amazon Elastic File System (EFS) with AWS Batch was the most viable approach. Amazon EFS offered a seamless, scalable, and cost-effective solution, enabling us to enhance processing efficiency and minimize resource overheads.

By using Amazon EFS within our AWS Batch workflows, we mitigated the inefficiencies associated with data transfers and reduced processing times. This guide walks through configuring EFS volumes for Batch jobs.

EFS Security Group

First, we need to create a security group specifically for the EFS volume. This dedicated security group allows NFS traffic (TCP port 2049) between the Batch compute environment and the EFS file system, and prevents access from other sources.

Additionally, for this setup to work, we assume that batch-security-group already exists for your Batch compute environment in your AWS account. It is referenced in the EFS security group configuration.

resource "aws_security_group" "efs_sg" {
  name        = "efs-sg"
  description = "Security Group for EFS"

  # Allow NFS traffic from the Batch compute environment
  ingress {
    from_port       = 2049
    to_port         = 2049
    protocol        = "tcp"
    security_groups = ["batch-security-group"] # Replace with the ID of your actual Batch security group
  }

  egress {
    from_port       = 2049
    to_port         = 2049
    protocol        = "tcp"
    security_groups = ["batch-security-group"] # Replace with the ID of your actual Batch security group
  }
}

EFS Volume Configuration

Define EFS File System

When configuring the EFS file system, it’s essential to understand the performance_mode and throughput_mode options.

Performance Mode Options:

General Purpose: Offers a balance of IOPS, throughput, and consistent low latencies.

Max I/O: Scales to higher aggregate throughput and operations per second, at the cost of slightly higher latencies for file operations.

Throughput Mode Options:

Bursting (default for new file systems): Baseline throughput scales with the amount of data stored, and the file system can burst to higher throughput levels for limited periods.

Provisioned: Enables you to provision a specific throughput level.

resource "aws_efs_file_system" "example_efs" {
  creation_token   = "example-efs"
  performance_mode = "generalPurpose"
  throughput_mode  = "bursting"
  encrypted        = true

  tags = {
    Name = "ExampleEFS"
  }
}
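Because bursting throughput depends on how much data the file system holds, it can help to estimate what a given volume will deliver before committing to this mode. The following is a rough Python sketch of that arithmetic. The default rates (50 MiB/s of baseline and 100 MiB/s of burst per TiB stored, with a 100 MiB/s minimum burst) are assumptions to verify against the current Amazon EFS documentation, and the bursting_throughput helper is hypothetical.

```python
def bursting_throughput(stored_gib: float,
                        baseline_mib_s_per_tib: float = 50.0,
                        burst_mib_s_per_tib: float = 100.0,
                        min_burst_mib_s: float = 100.0) -> tuple:
    """Estimate (baseline, burst) throughput in MiB/s for bursting mode.

    The default rates are assumptions; check them against the current
    Amazon EFS documentation before relying on them.
    """
    stored_tib = stored_gib / 1024
    baseline = stored_tib * baseline_mib_s_per_tib
    burst = max(min_burst_mib_s, stored_tib * burst_mib_s_per_tib)
    return baseline, burst


# Example: a file system holding 256 GiB of data.
baseline, burst = bursting_throughput(stored_gib=256)
print(f"baseline={baseline:.1f} MiB/s, burst={burst:.1f} MiB/s")
```

Under these assumed rates, a small file system has a low baseline, so a job that reads tens of thousands of files may exhaust its burst allowance. If that happens, the Provisioned mode may be a better fit.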

EFS Mount Point Configuration

Next, we need to configure aws_efs_mount_target, a resource used to create a mount target for an EFS file system. A mount target provides a way to mount an EFS file system on an Amazon EC2 instance or other resources within an Amazon VPC.

Subnet: When creating a mount target, you specify the subnet within the VPC where you want the mount target to reside. This determines the network location from which your EC2 instances can access the EFS file system.

Security Groups: You can associate security groups with the mount target to control inbound and outbound traffic. These security groups define the network access rules for the mount target, ensuring secure communication between the EC2 instances and the EFS file system.

File System ID: You specify the ID of the EFS file system for which you’re creating the mount target. This associates the mount target with the specific EFS file system, allowing EC2 instances within the specified subnet to access the file system.

resource "aws_efs_mount_target" "example_mount_target" {
  file_system_id  = aws_efs_file_system.example_efs.id
  subnet_id       = "subnet-xxxxxx" # Replace with your subnet ID
  security_groups = [aws_security_group.efs_sg.id]
}
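EFS allows one mount target per Availability Zone for a given file system, so when a Batch compute environment spans several subnets, the mount target resource is typically repeated once per AZ. The Python sketch below shows one way to pick a single subnet per AZ. The subnet IDs, AZ names, and the pick_mount_target_subnets helper are illustrative assumptions and not part of the setup above.

```python
from typing import Dict, Iterable, List, Tuple


def pick_mount_target_subnets(subnets: Iterable[Tuple[str, str]]) -> List[str]:
    """Return one subnet ID per Availability Zone, keeping the first seen."""
    chosen: Dict[str, str] = {}
    for subnet_id, az in subnets:
        # Keep only the first subnet encountered in each AZ.
        chosen.setdefault(az, subnet_id)
    # Sort by AZ name so the result is deterministic.
    return [chosen[az] for az in sorted(chosen)]


# Hypothetical subnets of a Batch compute environment: (subnet ID, AZ).
example_subnets = [
    ("subnet-aaa111", "us-east-1a"),
    ("subnet-bbb222", "us-east-1b"),
    ("subnet-ccc333", "us-east-1a"),
]
selected = pick_mount_target_subnets(example_subnets)
print(selected)
```

Each selected subnet would then get its own aws_efs_mount_target, all sharing the same file system ID and EFS security group.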

EFS Access Points Configuration

posix_user: The posix_user parameter defines the POSIX identity, including User ID (UID) and Group ID (GID), that is enforced for all file operations performed through the access point.

root_directory: Within the EFS Access Point configuration, the root_directory parameter defines the directory that the access point exposes to clients as the root of the file system. If that path does not exist yet, Amazon EFS creates it only when you specify the directory ownership and permissions (creation_info) at the time of creation; if you omit them, the directory is not generated automatically. Therefore, defining the ownership (UID, GID) and setting the appropriate permissions are crucial for aligning with intended access controls and security requirements.

resource "aws_efs_access_point" "example_access_point" {
  file_system_id = aws_efs_file_system.example_efs.id

  posix_user {
    gid = 1001
    uid = 1001
  }

  root_directory {
    path = "/demo"

    creation_info {
      owner_uid   = 1001
      owner_gid   = 1001
      permissions = "755"
    }
  }
}
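To make the interaction between posix_user and the "755" permissions more concrete, here is a small Python sketch that evaluates the classic owner/group/other permission bits. It is a simplified model (it ignores root overrides and ACLs), and the can_access helper is hypothetical; it only illustrates why the UID and GID in posix_user should match the owner set in creation_info when jobs need to write.

```python
def can_access(mode: str, owner_uid: int, owner_gid: int,
               uid: int, gid: int, want: str) -> bool:
    """Check a read/write/execute request against octal permission bits.

    Simplified model: exactly one of the owner, group, or other triads
    applies; root overrides and ACLs are ignored.
    """
    bits = int(mode, 8)
    if uid == owner_uid:
        triad = (bits >> 6) & 0o7
    elif gid == owner_gid:
        triad = (bits >> 3) & 0o7
    else:
        triad = bits & 0o7
    needed = {"r": 0o4, "w": 0o2, "x": 0o1}[want]
    return bool(triad & needed)


# Values from the access point above: /demo is owned by 1001:1001, mode 755.
owner_can_write = can_access("755", 1001, 1001, uid=1001, gid=1001, want="w")
other_can_write = can_access("755", 1001, 1001, uid=2000, gid=2000, want="w")
other_can_read = can_access("755", 1001, 1001, uid=2000, gid=2000, want="r")
print(owner_can_write, other_can_write, other_can_read)
```

In this model, an identity of 1001:1001 can write to /demo, while any other identity would only be able to read and traverse it.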

IAM Policy for EFS

Before configuring the AWS Batch job with EFS, setting up an IAM policy that provides the necessary permissions for the EFS resources is crucial. This IAM policy will define the permissions required to read and write to the EFS volume from the AWS Batch job.

Here’s a sample IAM policy:

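{
  "Version": "2012-10-17",
  "Statement": [
    {
      "Effect": "Allow",
      "Action": [
        "elasticfilesystem:ClientMount",
        "elasticfilesystem:ClientWrite"
      ],
      "Resource": "arn:aws:elasticfilesystem:us-east-1:account-id:file-system/fs-id",
      "Condition": {
        "StringEquals": {
          "elasticfilesystem:AccessPointArn": "arn:aws:elasticfilesystem:us-east-1:account-id:access-point/fsap-id"
        }
      }
    }
  ]
}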

Note: The elasticfilesystem:ClientRootAccess permission will be required if the access point root_directory parameter is not set or is "/". This is not recommended due to potential security issues.

Job Definition with EFS Volume

In our last step, we will create a job definition referencing the EFS volume and execute a Python script that opens the file /efs/example.txt and appends the current date and time to it on a new line.

Create a script.py file with the following content, which you can package into a container image and push to your ECR repo:

import datetime

file_path = "/efs/example.txt"

# Open the file in append mode and write the current date and time
with open(file_path, 'a') as file:
    current_datetime = datetime.datetime.now().strftime("%Y-%m-%d %H:%M:%S")
    file.write(f"{current_datetime}\n")

Please note that the provided script is a straightforward example demonstrating file access within the EFS volume. In real-world scenarios, you may need to incorporate additional functionalities and error handling as per your specific requirements.
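As one possible starting point for that error handling, the sketch below wraps the same append logic in a function that fails early when the mount directory is missing and reports write errors through an exit code. The append_timestamp and run helpers are hypothetical names, and the demonstration uses a temporary directory as a stand-in for the EFS mount.

```python
import datetime
import os
import sys
import tempfile


def append_timestamp(file_path: str) -> str:
    """Append the current date and time to file_path and return the line."""
    directory = os.path.dirname(file_path) or "."
    # Fail early with a clear message if the EFS mount is missing.
    if not os.path.isdir(directory):
        raise FileNotFoundError(f"Mount directory not found: {directory}")
    line = datetime.datetime.now().strftime("%Y-%m-%d %H:%M:%S")
    with open(file_path, "a") as file:
        file.write(f"{line}\n")
    return line


def run(file_path: str) -> int:
    """Return 0 on success and 1 on failure, suitable for sys.exit()."""
    try:
        append_timestamp(file_path)
    except OSError as error:
        print(f"Could not write to {file_path}: {error}", file=sys.stderr)
        return 1
    return 0


# Demonstration against a temporary directory standing in for the EFS mount.
with tempfile.TemporaryDirectory() as mount:
    ok_code = run(os.path.join(mount, "example.txt"))
    missing_code = run(os.path.join(mount, "missing", "example.txt"))
print(ok_code, missing_code)
```

Inside the container, the script would instead end with sys.exit(run("/efs/example.txt")), so that a failed write produces a non-zero exit code and the Batch job is reported as failed.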

And finally we’re ready to create a job definition:

resource "aws_batch_job_definition" "example_job_definition" {
  name = "example-job-definition"
  type = "container"
  container_properties = <

The authorizationConfig property makes the job use IAM authorization when mounting the EFS volume, so access is governed by the IAM policy defined earlier.
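For reference, the following Python sketch builds a container properties document with an EFS volume and an authorizationConfig block, then serializes it to JSON. It is an illustration under stated assumptions rather than the exact definition used here: the image URI, role ARN, command, resource sizes, volume name, and the efs_container_properties helper are all placeholders, while the field names follow the AWS Batch container properties schema.

```python
import json


def efs_container_properties(image, job_role_arn, file_system_id, access_point_id):
    """Build Batch container properties that mount an EFS access point at /efs."""
    return {
        "image": image,
        "command": ["python", "script.py"],  # assumed entry point
        "jobRoleArn": job_role_arn,
        "resourceRequirements": [
            {"type": "VCPU", "value": "1"},
            {"type": "MEMORY", "value": "512"},
        ],
        "volumes": [
            {
                "name": "efs",
                "efsVolumeConfiguration": {
                    "fileSystemId": file_system_id,
                    # Transit encryption must be enabled when an access point is used.
                    "transitEncryption": "ENABLED",
                    "authorizationConfig": {
                        "accessPointId": access_point_id,
                        # Use the job role's IAM permissions when mounting.
                        "iam": "ENABLED",
                    },
                },
            }
        ],
        "mountPoints": [
            {"sourceVolume": "efs", "containerPath": "/efs", "readOnly": False}
        ],
    }


# All identifiers below are placeholders, not values from this guide.
properties = efs_container_properties(
    image="account-id.dkr.ecr.us-east-1.amazonaws.com/example:latest",
    job_role_arn="arn:aws:iam::account-id:role/example-batch-job-role",
    file_system_id="fs-id",
    access_point_id="fsap-id",
)
container_properties_json = json.dumps(properties, indent=2)
print(container_properties_json)
```

The containerPath of /efs matches the path that script.py writes to, and the job role is the one that carries the EFS IAM policy.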

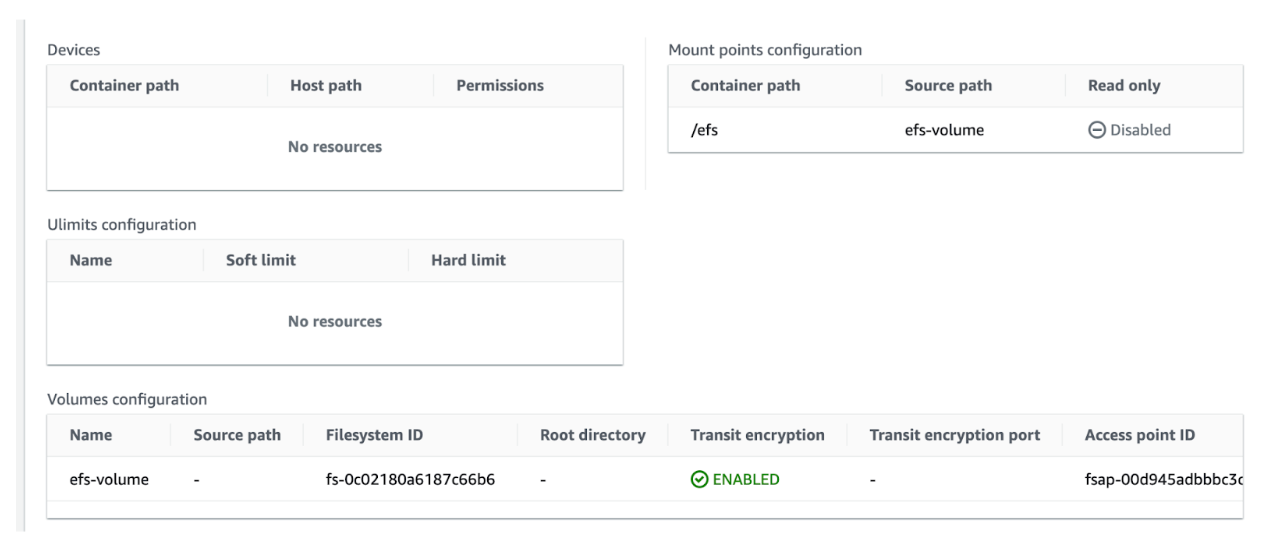

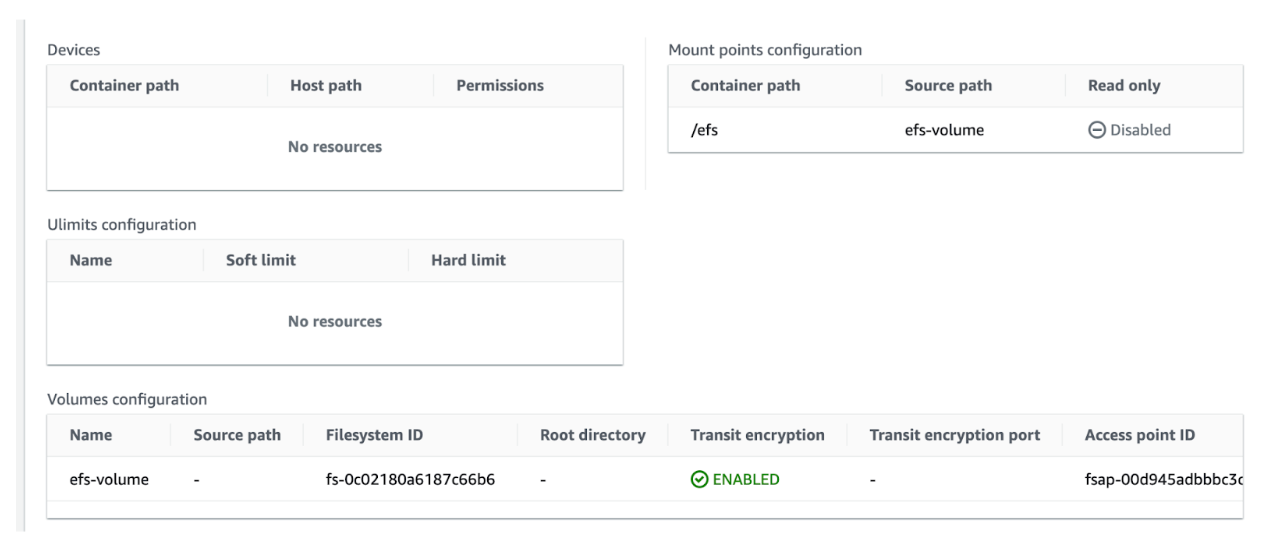

Here’s how the job definition will look in AWS console:

Conclusion

Using Amazon EFS within AWS Batch workflows offers a robust way to persist data across batch jobs. While EFS provides significant advantages in terms of scalability and performance, its costs can be substantial, so understanding and budgeting for them is an important part of integrating EFS into your workflows. You might want to consider using lifecycle policies, or even setting up an automated cleanup that removes files that are no longer used from your volume.
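As a rough illustration of the automated cleanup idea, the Python sketch below deletes files that have not been modified within a given number of days. The remove_stale_files helper, the 7-day threshold, and the file names are assumptions for the example, which runs against a temporary directory rather than a real EFS mount.

```python
import os
import tempfile
import time
from typing import List, Optional


def remove_stale_files(root: str, max_age_days: float,
                       now: Optional[float] = None) -> List[str]:
    """Delete regular files under root not modified within max_age_days."""
    now = time.time() if now is None else now
    cutoff = now - max_age_days * 86400
    removed = []
    for directory, _subdirs, filenames in os.walk(root):
        for filename in filenames:
            path = os.path.join(directory, filename)
            if os.path.getmtime(path) < cutoff:
                os.remove(path)
                removed.append(path)
    return removed


# Demonstration in a temporary directory standing in for the EFS mount.
with tempfile.TemporaryDirectory() as mount:
    old_file = os.path.join(mount, "old.txt")
    new_file = os.path.join(mount, "new.txt")
    for path in (old_file, new_file):
        with open(path, "w") as handle:
            handle.write("data\n")
    # Backdate old.txt by 30 days.
    thirty_days_ago = time.time() - 30 * 86400
    os.utime(old_file, (thirty_days_ago, thirty_days_ago))
    removed = [os.path.basename(p) for p in remove_stale_files(mount, max_age_days=7)]
    remaining = sorted(os.listdir(mount))
print(removed, remaining)
```

A script like this could run as its own scheduled Batch job with the same EFS volume mounted. Note that EFS lifecycle policies move infrequently accessed files to cheaper storage classes rather than deleting them, so the two approaches complement each other.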

This guide covered configuring the EFS security group, file system, mount targets, access points, IAM policy, and Batch job definition, which should give you a solid starting point for your own batch processing requirements.